the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Statistical reconstruction of European winter snowfall in reanalysis and climate models based on air temperature and total precipitation

Flavio Maria Emanuele Pons

Davide Faranda

The description and analysis of compound extremes affecting mid- and high latitudes in the winter requires an accurate estimation of snowfall. This variable is often missing from in situ observations and biased in climate model outputs, both in the magnitude and number of events. While climate models can be adjusted using bias correction (BC), snowfall presents additional challenges compared to other variables, preventing one from applying traditional univariate BC methods. We extend the existing literature on the estimation of the snowfall fraction from near-surface temperature, which usually involves binary thresholds or nonlinear least square fitting of sigmoidal functions. We show that, considering methods such as segmented and spline regressions and nonlinear least squares fitting, it is possible to obtain accurate out-of-sample estimates of snowfall over Europe in ERA5 reanalysis and to perform effective BC on the IPSL_WRF high-resolution EURO-CORDEX climate model when only relying on bias-adjusted temperature and precipitation. In particular, we find that cubic spline regression offers the best tradeoff as a feasible and accurate way to reconstruct or adjust snowfall observations, without requiring multivariate or conditional bias correction and stochastic generation of unobserved events.

- Article

(15423 KB) - Full-text XML

- BibTeX

- EndNote

Despite the expectations of less frequent snow events in a warming climate, there are still several motivations to study trends in future snowfall. First of all, snowfall extremes can still have a great impact on economy and society. Recent snowfall over large, populated areas of France in February 2018 and in Italy in January 2017 caused transport disruption, several casualties, and economic damage. Snow is also an important hydrological quantity and crucial for the tourism industry of some countries. Although climate models predict a general reduction in snowfall amounts due to global warming, accurate estimates of this decline heavily depend on the considered model. Moreover, while this prediction is valid at the global scale, there may be regional exceptions, with northern areas receiving more snowfall during the winter. Large discrepancies in snowfall amounts indeed exist for observational or reanalysis datasets: in detecting recent trends in extreme snowfall events, Faranda (2020) has also investigated the agreement between the ERA5 reanalysis and the E-OBSv20.0e gridded observations in representing snowfall. Since direct snowfall measurements were not available for E-OBSv20.0e, all precipitation that occurred on days where the average temperature was below 2 ∘C was considered as snowfall. Faranda (2020) found that observed trends and the agreement in absolute value between the two datasets largely depended on the considered region. Overall, the climatologies of snowfall provided by the two datasets had similar ranges, although ERA5 tended to overestimate snowfall compared to E-OBSv20.0e. Even though such a binary separation of snowfall, using a temperature threshold, seemed a good option to retrieve snowfall data from E-OBSv20.0e, it has obvious limitations. For example, in an event characterized by abundant precipitation but a temperature associated to a roughly 50 % snow fraction, snowfall would be either strongly under- or overestimated. In this paper, we explore the possibility of reconstructing snowfall from bias-corrected temperature and precipitation via adequate statistical methods to obtain an improved estimate compared to raw snowfall simulation from climate models.

Climate models are the primary tool to simulate multi-decadal climate dynamics and to generate and understand global climate change projections under different future emission scenarios. Both regional and global climate models have coarse resolution and contain several physical and mathematical simplifications that make the simulation of the climate system computationally feasible but also introduce a certain level of approximation. This results in biases that can be easily observed when comparing the simulated climate to observations or reanalysis datasets. Therefore, they provide limited actionable information at the regional and local spatial scales. To circumvent this problem, it is of crucial importance to correct these biases for impact and adaptation studies and for the assessment of meteorological extreme events in a climate perspective (see, e.g., Ayar et al., 2016; Grouillet et al., 2016).

In order to mitigate the aforementioned biases, a bias correction (BC) step is usually performed. This step usually consists of a methodology designed to adjust specific statistical properties of the simulated climate variables towards a validated reference dataset in the historical period. The chosen statistics can be very simple, e.g., mean and variance, or it may include dynamical features in time, such as a certain number of lags of the autocorrelation function for time series data, it can be constructed using a limited number of moments or aim at correcting the entire probability distribution of the variable, and the correction can also be carried out in the frequency domain so that the entire time dependence structure is preserved. For an overview of various BC methodologies applied to climate models see, for example, Teutschbein and Seibert (2012, 2013) and Maraun (2016). Key efforts have also been made to bias correct precipitation (Vrac et al., 2016) and for multivariate (i.e., multi-sites and variables) approaches, both in downscaling and BC contexts (Vrac and Friederichs, 2015; Vrac, 2018).

Despite the effort devoted to correcting precipitation bias, only a few studies propose specific BC methods for snowfall data from climate projections. For instance, Frei et al. (2018) propose a bias adjustment of snowfall in EURO-CORDEX models specific to the Alpine region, involving altitude-adjusted total precipitation and a single threshold temperature to separate rain and snow. Krasting et al. (2013) study snowfall in CMIP5 models at the northern hemispheric level, highlighting biases but without suggesting any methodology to reduce them, while Lange (2019) proposes a quantile mapping approach that can be used for univariate BC of snowfall.

Indeed, snowfall presents additional challenges compared to other variables, precluding the use of traditional univariate BC methods. Besides the intermittent and non-smooth nature of snowfall fields – a feature in common with total precipitation – snowfall is the result of complex processes which involve not only the formation of precipitation but also the existence and persistence of thermal and hygrometric conditions that allow the precipitation to reach the ground in the solid state. As a result, snow is often observed in a mixed phase with rain, especially when considering daily data. This phase transition poses additional challenges to the bias correction of snowfall, namely the need of separating the snow fraction, using the available meteorological information. Ideally, methods to perform such a separation, also known as precipitation-phase partitioning methods, should be based on wet-bulb temperature, to which the snow fraction is particularly sensitive (Ding et al., 2014). However, due to the difficulty in estimating this parameter in the case of climate models, the task is usually performed by relying on temperature data. These issues would require the application of multivariate BC methodologies, which are significantly more complex than their univariate counterparts and whose applicability is not yet fully understood (François et al., 2020).

In the following, T denotes the mean daily near-surface temperature, Ptot the total daily precipitation, SF the total daily snowfall, fs denotes the snow fraction, and fr the rain fraction of total precipitation.

The fact that T is an effective predictor of fs was first observed by Murray (1952). This study tried to also link the precipitation phase to other variables, such as the freezing level and the thickness of pressure difference layers (1000–700 and 1000–500 hPa), finding that the near-surface temperature alone is as effective at predicting the snow fraction as the others. Following this result, several authors suggest the use of a binary separation of the snow fraction based on a threshold temperature both in climatological (US Army Corps of Engineers, 1956; de Vries et al., 2014; Zubler et al., 2014; Schmucki et al., 2015) and hydrological (Bergström and Singh, 1995; Kite, 1995) studies. In this setting, a threshold temperature T* is chosen, so that the fraction fs of total precipitation falling as snow is 1 for below and 0 for .

In a hydrological modeling context, Pipes and Quick (1977) proposed a linear interpolation between two threshold temperatures . This method provides a simple, yet more realistic, representation of the relationship between the snow fraction and near-surface temperature, which often resembles an inverse S-shaped curve. L'hôte et al. (2005) find similar S-shaped relationships over the Alps and the Andes, pointing to a broad validity of such an assumption; several other instances of research finding evidence of such a relationship are also mentioned in the following paragraph. An important limitation of this method is that the thresholds Tlow and Thigh are fixed. As reported by Kienzle (2008), Pipes and Quick (1977) use threshold values C and C to estimate snowfall in the U.S. Wen et al. (2013) point out that a similar method had already been implemented by US Army Corps of Engineers (1956), using three different threshold values; this adds parameters to the statistical model, which then requires even more fine-tuning before being applied to prediction. In more recent years, the double threshold method has also been applied to climatological analysis, for example in McCabe and Wolock (2008, 2009), where the authors stress that the choice of the thresholds require an important calibration procedure step. Still in the class of threshold models, Kienzle (2008) uses a parameterization considering a temperature TT at which the precipitation is half rain and half snow and a temperature range TR within which both phases co-exist. This method requires validation, using reliable and sub-daily station data, making it less suited for the characterization of gridded snowfall over large domains in reanalysis or climate models.

Slightly more complex methods aim at reproducing the quasi-smooth shape of the precipitation-phase transition by fitting S-shaped functions to the relationship between T and fs (or fr). For example, Dai (2008) proposes a hyperbolic tangent , while McAfee et al. (2014) choose a logistic function , both fitted via nonlinear least squares (NLSs). Harder and Pomeroy (2013) propose a similar procedure, adopted also by Pan et al. (2016), based on a sigmoidal function , where b and c are parameters calibrated using data from a single location, and Ti is the so-called hydrometeor temperature, i.e., the temperature at the surface of a falling hydrometeor, which is defined by Harder and Pomeroy (2013) as a function of air temperature and humidity. While Harder and Pomeroy (2013) find that this method provides more accurate results compared to simple and double thresholds, the estimation of Ti requires reliable measurements or predictions of relative humidity, making this technique more suited to treating observational data.

Wen et al. (2013) present a comparison of some of these methods. In particular, this study examined the model-generated snowfall using different models (namely a regional model with no atmosphere–surface coupling forced by observations and a coupled regional circulation model with land interaction) and assumed forms of fs. The methods put to the test are single threshold (with T* taken to be 0 and 2.5 ∘C), double threshold, both with the parameter values fixed by US Army Corps of Engineers (1956) and Pipes and Quick (1977), and the method proposed by Kienzle (2008) and the nonlinear relationship specified by Dai (2008). Results are mixed, with different methods performing differently in the two models. It is worth stressing that Wen et al. (2013) do not tune the parameterizations, nor do they assess whether the chosen single threshold is optimal for the considered datasets.

We stress that other studies highlight a dependency of the snow fraction on additional variables. For example, Jennings et al. (2018) find a strong sensitivity of fs to relative humidity, which is already included in the partitioning method by Harder and Pomeroy (2013). Dai (2008) and Jennings et al. (2018) also find a dependency on air pressure, pointing out the role of elevation in determining the optimal threshold temperature. Behrangi et al. (2018) find that the accuracy of phase partitioning models is increased by including wet-bulb and dew point temperature, pressure, and wind speed. We are not concerned by the role of elevation, since we estimate grid-point-specific statistical models, which automatically account for this effect. Concerning relative humidity and other thermodynamic quantities such as dew point and wet-bulb temperatures, we are aware of their role in determining the precipitation phase. However, our goal is to compare methods suitable for application to climate projection datasets. The inclusion of further variables would require obtaining and performing an effective bias correction on a much larger volume of data. We limit our analysis to a minimal set of variables that can significantly increase the accuracy of climate models, compared to unadjusted snowfall output.

We aim at finding a feasible method that allows for the accurate estimation of fs as a function of T and then of in gridded time series datasets, overcoming the drawbacks of the methodologies applied so far in the literature. We do so, first, by proposing a method to detect candidate values for the threshold temperature(s) in an automated and computationally feasible way, and second, by fitting nonlinear functions that can incorporate the threshold value(s) with or without parametric assumptions (such as hyperbolic tangent or logistic functions). In this way, we aim at ensuring sufficient flexibility to apply results from these methods to out-of-sample data, while adopting very simple statistical specifications. Our goal is to find one or more methodologies that allow us to reconstruct snowfall over large domains, including regions where this phenomenon is relatively rare, and occasional extremes can cause service disruption, damage, economic loss, and loss of life.

The rest of the paper is organized as follows: in Sect. 2, we describe the datasets used for statistical model specification and assessment of the snowfall reconstruction performance. In Sect. 3, we illustrate the statistical modeling of the snow fraction, and in Sect. 4, we discuss methods to evaluate and compare statistical methods. In Sect. 5, we present the results obtained on the considered datasets, including case studies on four regions characterized by different snowfall mechanisms and climatologies. In Sect. 6, we discuss the main results, and in Sect. 7, we report our conclusions.

2.1 The ERA5 reanalysis dataset

Most of the hydrological and climatological studies cited in Sect. 1 are focused on limited areas where snowfall is a recurrent phenomenon. In general, it is possible to find high-quality snowfall data for areas heavily affected by frequent snowfall, such as Scandinavian countries and the Alpine region (Auer et al., 2005; Scherrer and Appenzeller, 2006; Isotta et al., 2014), which, however, can still suffer from the lack of reliable data at high altitudes (Beaumet et al., 2020). On the other hand, good quality snow data at the synoptic scale are in general difficult to obtain (Rasmussen et al., 2012).

To match the goals defined in the previous section, we decide to rely on a gridded reanalysis dataset at the European scale to specify and validate our snowfall method, rather than on observational data from limited areas.

In particular, we use the fifth generation reanalysis product (ERA5) provided by the European Centre for Medium-Range Weather Forecast (ECMWF). This dataset has a high (0.25∘) horizontal resolution over Europe and state-of-the-art physical parameterizations (Copernicus Climate Change Service, 2017) over the period 1979–2005. We consider daily data over a domain covering the area between 26 and 70∘ N and between −22 to 46∘ E, consisting of a lat–long grid of 273×177 points covering Europe, part of the eastern Atlantic, and parts of Russia and northern Africa. We only include days from December, January, and February (DJF) in our analysis.

In most reanalysis and climate simulation models, snowfall is represented as snowfall flux (SF) in (Copernicus Climate Change Service, 2017, see also https://esgf-node.ipsl.upmc.fr, last access: 22 August 2022, for CMIP5, CMIP6, and EURO-CORDEX variable lists), from which it is easy to retrieve total snowfall through time integration. Here we consider SF expressed in m d−1 of equivalent water depth, considering that 1 kg m−2 corresponds to 10−3 m of water equivalent. This quantity is relevant for hydrologists, as it is closely related to runoff and river discharge, but also for climatologists, since it represents the intensity of the phenomenon well.

Snowfall in ERA5 consists of snow produced by both large-scale atmospheric flow and convective precipitations. It measures the total amount of water accumulated during the considered time step as the depth of the water resulting if all the snow melted and was spread evenly over the grid box.

We aggregate the hourly ERA5 data into daily values to match the time step of the climate simulations described in the following. We choose ERA5 as the dataset for our study because of its physical consistency and the use of advanced assimilation techniques for its compilation (Faranda, 2020).

2.2 Historical climate simulation

In this paper, we use outputs of a climate projection model from the EURO-CORDEX project obtained by nesting the regional circulation model (RCM) IPSL-WRF381P within the r1i1p1 variant of the IPSL-IPSL-CM5A-MR global circulation model (GCM) from CMIP5. The RCM results are presented at a 0.11∘ regular grid, while the GCM is given at a resolution of 2∘ for the period 1950–2100. However, model outputs considered in our analysis are presented at a 0.25∘ resolution, matching the ERA5 grid. Models are run under different Representative Concentration Pathway (RCP) scenarios (namely RCP2.6, 4.5, and 8.5); in our analysis, we will only include DJF data for the historical sub-period of 1979–2005. See Vautard et al. (2020) and Coppola et al. (2021) for more details about the EURO-CORDEX ensemble.

The relevant variables are T, Ptot, and SF, sampled at a daily time step. The datasets are freely available via the Earth System Grid Federation (ESGF) nodes (https://esgf.llnl.gov/nodes.html, last access: 22 August 2022). Both T and Ptot are available in a bias-adjusted version based on ERA5, using the cumulative distribution function transform (CDF-t) introduced by Vrac et al. (2012) and further developed by Vrac et al. (2016) to improve the adjustment of precipitation frequency. CDF-t is a distribution mapping method, frequently chosen in studies that involve climate projections, as they perform better than methods based on linear transformations in case of changing future conditions (Teutschbein and Seibert, 2013).

In the following, we show how statistical modeling of SF based on bias-adjusted Ptot and T can replace the direct BC of the snowfall, markedly improving model SF statistics with respect to the reference data. For both ERA5 and IPSL_WRF, we apply a binary land–sea mask to only consider snowfall over the continents.

In this section, we describe a set of candidate statistical models for the snow fraction fs as a function of the near-surface temperature T and how we compare their performance in terms of accurate reconstruction of the snowfall in an out-of-sample test set.

First, we consider the single threshold method (STM) as our baseline method. Given the spatial extent of our dataset and the relatively fine grid resolution, we anticipate that more refined methods could be better suited to the purpose of climatological analysis of snowfall. In particular, we aim at finding parsimonious models that can be easily fitted, pointwise, on the grid, producing location-specific parameter estimates that we may exploit to obtain an accurate approximation of snowfall using T and Ptot.

In order to do so, we explore different statistical models. We extend the STM to a more flexible framework, consisting of two steps. First, for each grid point we analyze the relationship between T and fs, and we exploit a breakpoint (or change-point) search algorithm to assess whether two, one, or no thresholds should be assumed to describe the rain–snow transition as a function of near-surface temperature. Then, we rely on regression to fit grid-point-specific statistical models of the snow fraction, incorporating the information about threshold temperatures. Furthermore, we explore spline regression with knots, based on the local probability distribution of T, which makes it possible to fit any nonlinear function without the initial breakpoint search step. Finally, we consider a method based on directly fitting a parametric S-shaped function to the data, as in Dai (2008). This statistical model can be fitted pointwise, carrying out the parameter estimation using NLS.

As a final remark, given their ready availability in bias-adjusted form, we estimated the presented regression models including also 850 hPa air temperature and total precipitation as explanatory variables of the snow fraction. However, including these additional explanatory variables did not significantly improve the goodness of fit or prediction skills of the regression models. For this reason, we only present and discuss results of one-dimensional methods considering near-surface temperature.

3.1 Single threshold model (STM)

First of all, we assess the results obtained applying the STM, introduced by Murray (1952), as follows:

As already discussed in Sect. 1, this method has been used in both hydrological and climatological contexts for almost 70 years, until the present. The most difficult step of this specification, and the greatest drawback of the method, is the choice of the threshold temperature itself.

Despite its simplicity, this technique presents some advantages. First, if T* is accurately chosen to preserve long-run snow totals in the reference dataset, snow totals in the climate model are also not expected to be severely biased. Moreover, this method can potentially represent extreme DJF snowfall in cold climates better than more complex methods, since it is very likely that important snowfall episodes happen below the threshold daily temperature and correspond to events during which the totality of daily precipitation falls as snow. On the other hand, heavy wet-snowfall with disruptive effects is a well-known phenomenon in temperate climates (Nikolov and Wichura, 2009; Bonelli et al., 2011; Llasat et al., 2014; Ducloux and Nygaard, 2014).

However, this method is also naive, as it gives a binary representation of a quantity continuously varying in [0, 1]. This makes it impossible for the results to provide insights on snowfall features in case of more in-depth climatological analysis or more refined hydrological models. Furthermore, the search for the optimal threshold should not be complex or computationally expensive, otherwise it invalidates the advantage of using such simplified assumptions. This does not prevent us from detecting a representative value of T* when conducing a site-specific or local-scale study, but a single value of the threshold extracted from the literature or estimated, considering the whole aggregated dataset, can be a gross approximation in case of gridded high-resolution data on a wide domain such as the EURO-CORDEX one.

Estimates reported in the literature for the single threshold range across quite different values. For example, Auer Jr. (1974) finds the optimal temperature to give a binary representation of the snowfall to be 2.5∘C after analyzing station data in the U.S., but values as low as 0∘C are reported by Wen et al. (2013), while Jennings et al. (2018) find a mean threshold of 1∘C for the northern hemisphere over the period 1978–2007. In a recent analysis of snowfall trends over Europe in the last few decades, based on ERA5 and E-OBS data, Faranda (2020) suggests a threshold of C, also finding that any threshold between 0 and 2.5∘C does not significantly change the overall results in that specific study, which focused on observed trends in snowfalls during the last few decades.

However, it is worth mentioning that the results by Faranda (2020) refer to snowfall over Europe where spatial averaging is applied at regional or country level. It is possible that different thresholds in the interval 0–2.5∘C are more suited for different parts of the domain, but the errors cancel out in the spatial averaging, thus not showing the sensitivity to the threshold value in terms of long run statistics. This condition does not hold if the reconstruction of the snowfall must be carried out to preserve spatial structures, and in general, we do not expect to be able to obtain an accurate representation of the snow fraction using a single value for the threshold over the whole domain. On the other hand, we can still expect this method to perform conveniently when considering long-term spatially averaged statistics but also right-tail extremes in cold climates or elevated locations. Here, extreme snowfall leading to important snow accumulation on the ground is expected to be concurrent with large daily precipitation and low temperature and then with high values of fs. Choosing a threshold above the freezing point and considering any precipitation happening with any daily mean temperature lower than such thresholds is conservative in terms of the estimation of extreme events, despite its inadequacy in terms of reproducing more complex features of snowfall.

In the following, we discuss a methodology that encompasses the case of a locally selected threshold temperature, while enabling us to determine the optimal number of thresholds and their respective values for each point of the considered domain.

3.2 Segmented logit-linear regression

In order to overcome the limitations of the STM of fs, we aim at reproducing the potentially nonlinear relationship between T and fs. We propose a way to extend the method by Pipes and Quick (1977), using the following two-step approach:

- i.

determine the optimal number m of thresholds temperatures for each grid point and their value, using a breakpoint search algorithm, and

- ii.

in each of the m+1 regimes corresponding to the estimated m thresholds, we describe the relationship between T and fs using a logit-linear regression.

In Appendix A, we describe the search algorithm used to determine the optimal number of threshold temperatures at each grid point. It is worth mentioning that we also considered a segmented beta regression with a logit link function, which would be the most theoretically sound way of modeling a [0,1] bounded variable. However, this algorithm showed several convergence problems, which ultimately made it unfeasible.

3.3 Cubic spline regression

While segmented logit-linear regression allows for more flexible functional forms compared to a STM, it is based on the specific assumption of a piecewise logit-linear relationship between T and fs. In order to try and catch such nonlinearity without imposing possibly unrealistic assumptions, we consider cubic spline regression. The procedure, described in Appendix A, relies on piece-wise polynomials; the deciles of the point-specific temperature distribution are taken as the knots defining the partition, making this procedure able to capture nonlinearity and, thus, avoiding the need for a breakpoint search as in segmented regression.

3.4 Sigmoid function fit

Finally, following Dai (2008) and Jennings et al. (2018), we consider an inverse S-shaped or sigmoid function to directly fit the transition. This method links snow fraction and near-surface temperature through a hyperbolic tangent and features the following four parameters:

At each grid point, we estimate the parameter values using the R function nls(), based on the NL2SOL port algorithm (Gay, 1990). We consider two versions of this statistical model. In one, we estimate the value of all four parameters, using as initial values . In the other, hereinafter named the constrained sigmoid, we fix prior to the estimation, and we only estimate b and c. This choice followed the observation that estimating all four parameters results in unphysical behaviors for extremely low and high temperatures, where the sigmoid should approach 1 and 0, respectively.

We assess the performance of each method in recovering fs as a function of T and then , using a test set from ERA5 for statistical method evaluation. Then, we select the best method, if any is found, as the one providing the out-of-sample best prediction of snowfall in ERA5, and we use parameter estimates to approximate SF in the IPSL_WRF model, assessing its performance in terms of bias correction with respect to the original model output. In the following, the superscripts E5 and IW denote ERA5 and IPSL_WRF variables, respectively. Moreover, the hat superscript denotes estimations obtained from the regression models. For example, denotes the total precipitation in ERA5, and denotes the snow fraction estimated from T in the IPSL_WRF climate simulations. If no superscript is shown, we refer to the indicated variable in general. Furthermore, as already mentioned, denote the threshold temperatures obtained estimated by the segmented regression algorithm. Finally, we use θ to denote the generic parameter vector of each method, including β and the temperature thresholds and for the corresponding estimate.

As a preparatory step, we transform the data so that they are included in (0,1) without assuming the boundary values. In fact, the segmented regression on logit-transformed data is ill-behaved in case the variable assumes the limiting values of 0 and 1. To circumvent this problem, Smithson and Verkuilen (2006) propose a transformation to effectively shrink the interval as follows:

where n is the sample size. Notice that the interval amplitude will depend on n. For example, for n=50 the interval reduces to [0.01, 0.99], while for n=100 it ranges in [0.005, 0.995]. In the following, all variables are transformed using Eq. (2) for regression models but not for the STM and the sigmoid fit. Since we consider this adjustment as part of the regression procedure, no different notation will be used to denote the transformed variables.

For the statistical model selection and validation steps, we use the entire available period from 1979 to 2005. For the STM, we test two threshold temperatures T*, namely C, following Jennings et al. (2018), and C, following Faranda (2020). Then, we put , if , and , if . To estimate snow fraction models based on regression, for each point on the ERA5 grid, if the total number of snowfall events is a least n=30, we randomly select half of the values as a training set, and the remaining is used as a test set; otherwise, the grid point is excluded from the analysis. Pure rainfall events are also excluded from regression analysis. Each method is evaluated on the training set to obtain the parameter estimate (with the exception of the STM). Then, is used to estimate in the test set, and we finally obtain the estimated snowfall as .

The performance of the method is assessed by comparing true and predicted fs values in the test set. In particular, for each grid point, we compute two error measures, the mean absolute error (MAE), and the root mean square error (RMSE), as follows:

We consider the best method to be the one providing the best performance in terms of minimum MAE and RMSE over the considered domain. We repeat the estimation for each grid point using the entire sample size n, instead of dividing it into a training and test set. Then, we use the resulting and TIW to estimate the snow fraction in the climate model, , and the corresponding snowfall . Then, we compare both SFIW and to SFE5, and we assess whether the version estimated with the chosen method and using bias-adjusted total precipitation is closer to reanalysis than the raw climate model output, showing that the statistical method can be used to perform snowfall BC.

The capability of the chosen methods to perform a BC-like task can be evaluated in terms of similarity between the distribution of the estimated daily snowfall and the reanalysis values. We will use three measures of dissimilarity between the distributions, i.e., the Kolmogorov–Smirnov (KS) statistics, the Kullback–Leibler (KL) divergence, and the χ2 divergence. See Sect. A2 for an illustration of these quantities.

5.1 Threshold temperatures

The breakpoint analysis for the search of optimal threshold temperatures is described in detail in Sect. A1. For each grid point in the ERA5 dataset, we run the search algorithm, saving the number of resulting breakpoint positions, while the values are discarded, since the segmented regression algorithm only requires the number of breaks as an input. We exclude all grid points where the total number of DJF snowfall events over 1979–2005 is smaller than 30, which is also the cutoff we chose as a minimal sample size for the regressions.

It is worth noting that at least one threshold temperature is found for each grid point, even though having no breakpoint is an admissible outcome from the search algorithm. This corroborates the idea that some form of transition between two regimes is to be expected concerning the relationship between T and fs.

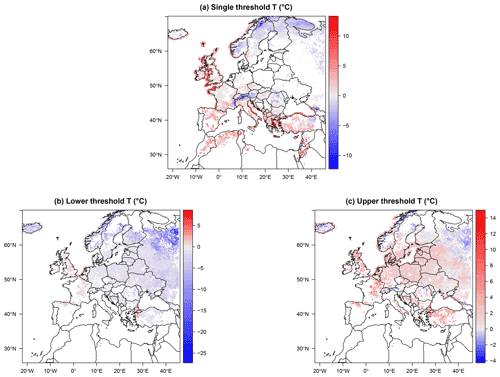

As mentioned in Sect. 3.2, and illustrated in detail in Appendix A, the segmented regression algorithm finds the optimal threshold temperatures through Eq. (A11), using quantiles of the independent variable as initial guess. The results of this threshold temperature estimation are shown in Fig. 2.

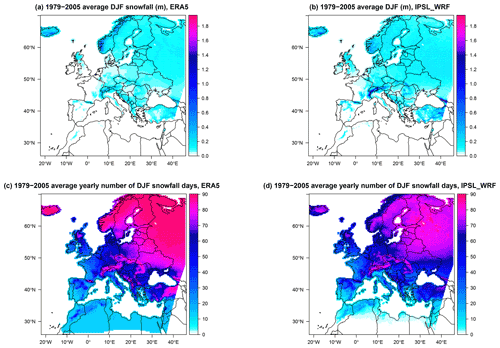

Figure 1The (a, b) 1979–2005 average DJF snowfall (m) and (c, d) 1979–2005 number of DJF snowfall days. The left column shows ERA5, and the right column shows IPSL_WRF.

Figure 2Threshold temperatures optimized for segmented logit-linear regression, according to Eq. (A11), using ERA5 data. (a) Locations with a single threshold temperature. (b, c) Locations with double threshold temperatures, with lower thresholds (b) and upper thresholds (c) also shown.

Figure 2a displays the locations and temperature values where only one threshold is found. These are also likely locations where the choice of a STM may produce good results. However, it is clear from the figure that the values of the threshold are quite variable, displaying negative values on some areas, as opposed to the usual choice for a STM framework. In particular, strongly negative values (up to ∘C) are found over the Alps and Scandinavia, and slightly milder but still negative values are also observed over parts of eastern Türkiye, Austria, the Czech Republic, Slovakia, Hungary, Bulgaria, Romania, and Moldova. All these areas are characterized by a large or extremely large number of snowfall events (Fig. 1b), but only the Alps and eastern Türkiye are also areas with a large average DJF snowfall. The other two regions characterized by extreme total snowfall amounts, Iceland and the coast of Norway, with the exception of the most southwestern part, are instead not represented by this single threshold configuration. Thresholds ∘C are found over central–western France and Belgium, while positive values, more typical for single threshold methods are found for the UK, Spain, southern France and Germany, peninsular Italy, and the northern parts of Morocco, Algeria, and Tunisia. Panels (b) and (c) in Fig. 2 show, respectively, and for all the remaining grid points, characterized by a double threshold. These areas include Iceland and central–eastern Europe at all latitudes, excluding the aforementioned areas characterized by single thresholds. Concerning , we observe temperatures ∘C in the UK, eastern France, and central Europe, decreasing towards negative values over more northern and eastern locations, including Iceland. A similar gradient is observed for the upper threshold , with positive temperatures over UK and most of central–eastern Europe, and values closer to 0 ∘C in southern Finland and western Russia. Areas displaying unrealistically negative values for both thresholds over Iceland, coastal Norway, and northern Russia are likely areas characterized by a steep transition and noisy data, preventing the algorithm from detecting realistic threshold values.

5.2 Statistical method evaluation and selection

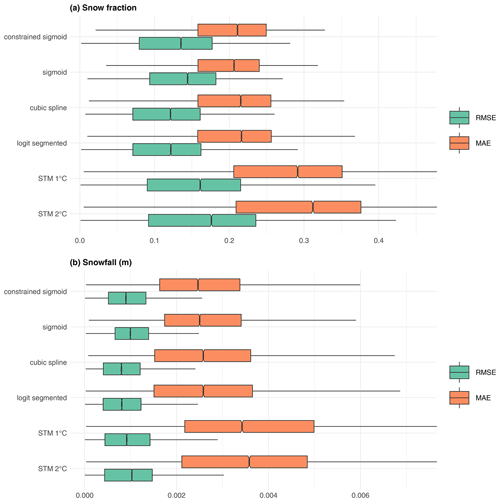

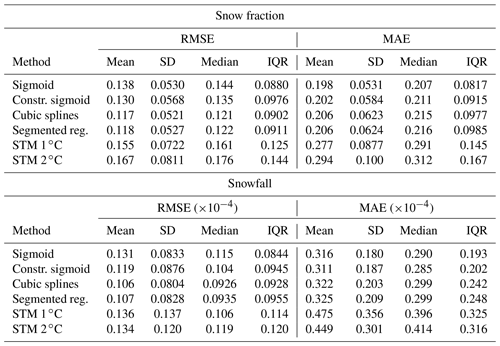

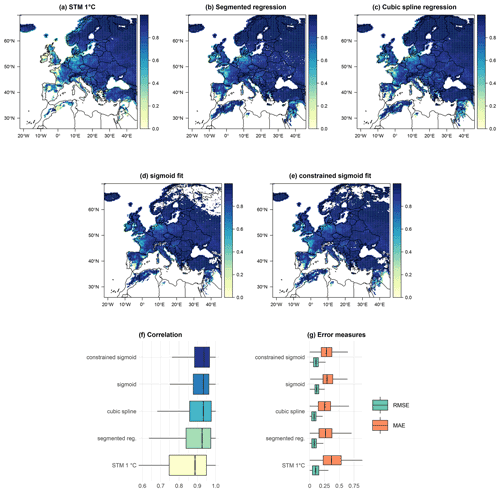

We assess the performance of each method in terms of snow fraction prediction, as described in Sect. 4. For every grid point in the ERA5 dataset, we randomly split the time series of snow fraction into a training and a test set, we estimate each statistical model in the training set, and we use parameter estimates to predict the values in the test set. In Fig. 3, we show a summary of the results in terms of the chosen error measures. Each box plot refers to the values of the error measure over the entire domain for grid points with more than 30 snowfall events. The tested methods include the STM with 1 and 2 ∘C threshold temperatures, the segmented logit-linear and the cubic spline regressions, and the sigmoid fit based on NLS, both with and without constraints on the parameters. We do not display outliers in the box plot for greater graphical clarity.

We show results for both the snow fraction, directly derived from the statistical prediction (Fig. 3a), and the snowfall, obtained as (Fig. 3b).

From a visual inspection, the STM produces the poorest performance in terms of median and variability of the errors, for both fs and SF. The other methods provide very similar results, with the two versions of the sigmoid showing slightly higher median RMSE and lower median MAE; the segmented logit-linear and the cubic spline regressions display no visible differences.

In order to choose the best possible methodology based on quantitative considerations, we test for significant differences among groups using the rank test proposed by Kruskal and Wallis (1952).

We perform a total of four Kruskal–Wallis tests on snow fraction RMSE, snow fraction MAE, snowfall RMSE, and snowfall MAE. In all cases, the null hypothesis must be rejected, with virtually null p values (all , which is the smallest value resolved in R). From this, we can infer that at least one method produces significantly smaller errors compared to at least another method. However, we cannot establish exactly which groups are concerned. For this purpose, we rely on post hoc testing using the pairwise Wilcoxon rank sum test (Wilcoxon, 1992), a nonparametric alternative to pairwise Student's t tests suited for non-Gaussian samples, also known as Mann–Whitney U test. See Sect. A3 for a detailed description of the statistical tests.

For fs, the test indicates significant differences among all groups, except between spline and segmented logit-linear regressions, (p values 0.34 and 0.60 for RMSE and MAE, respectively). For SF, the test gives results analogous to fs for RMSE (no difference between spline and segmented logit-linear regressions; p value =0.20), while, in terms of MAE, differences are nonsignificant also between the two versions of STM (p value =0.56), between spline and segmented logit-linear regression (p value =0.50), between sigmoid and segmented logit-linear regression (p value =0.12), and between sigmoid fit and spline regression (p value =0.34). It is worth mentioning that, due to the very large sample size, the tests may be sensitive even to very small differences, possibly leading to a high probability of rejection, even with differences that are unrecognizable in practice.

The close similarity of results from the segmented logit-linear and the spline regression is also evident from the summary statistics of the distributions of the two error measures for the two variables, as shown in Table 1. Based on these results, spline regression seems to be potentially the best candidate, as it shows the lowest error statistics, while being less demanding than segmented regression, as it does not require the breakpoint analysis step, which is the longest and most computationally intensive step among the presented procedures.

Table 1Summary statistics of the distributions of the RMSE and MAE for snow fraction and snowfall in ERA5. The mean, standard deviations, median, and interquartile range are shown.

Table 2Summary statistics of the distributions of correlation between ERA5 reanalysis and predicted snow fraction. Note: IQR indicates the amplitude of the interquartile range.

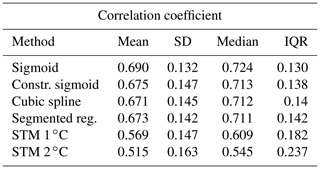

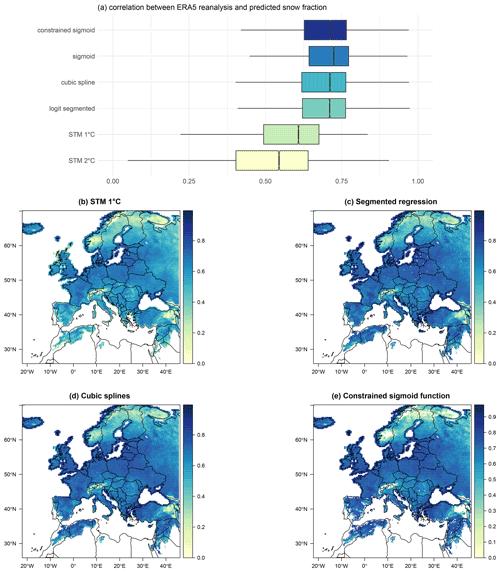

Figure 4Box plots of correlation coefficients between the ERA5 reanalysis and predicted snow fraction, using the five selected methods, for all grid points with at least 30 snowfall events (a). Mapped values of the snowfall correlation coefficient for the STM (b), segmented logit-linear regression (c), cubic spline regression (d), and constrained sigmoid fit (e). White areas over Scandinavia, the Alps, Türkiye and isolated points in Spain, southern France, and Italy shown in panel (e) are due to the non-convergence of the constrained sigmoid fit.

As a further criterion to choose the best-performing method, we consider the Pearson's correlation coefficient computed, at each grid point, between the snow fraction observed in ERA5 and predicted using the five methods under investigation. Notice that the factor linking fs and SF is for both true reanalysis and reconstructed data, so that results in terms of the correlation coefficient are exactly the same for both variables. The summary statistics for the correlation coefficient are shown in Table 2. The box plots, shown in Fig. 4a and constructed as described for MAE and RMSE, show that the STM with 2 ∘C displays the lowest correlation, followed by the 1 ∘C STM, segmented logit-linear regression, spline regression, constrained sigmoid, and unconstrained sigmoid fit. As in the previous case, the Kruskal–Wallis test finds a significant stochastic dominance of at least one group on the others, but the pairwise Mann–Whitney test suggests significant differences among all methods, except between segmented and spline regression (p value 0.9985).

Figure 4b–e show the spatial structure of correlation between predicted and observed snow fraction for all methods, except the STM with 2 ∘C threshold and the constrained sigmoid. Clearly, areas characterized by frequent and abundant winter snowfall, such as the Alps, Scandinavia, and eastern Türkiye, correspond to the lowest values of the correlation coefficient. It becomes evident that the slightly better performance of the unconstrained sigmoid fit is due to the non-convergence of the NLS algorithm over parts of the Alps, Norway, Sweden, Finland, and northern Russia, which are characterized by the lowest correlation values. This suggest that, while this method can perform as well as the segmented and spline regressions, it may be sensitive to misspecification, making it unsuitable for areas where the transition departs from an inverse S-shaped function. The constrained version does indeed show better convergence as it providing results very close to the logit-linear segmented and cubic spline regression.

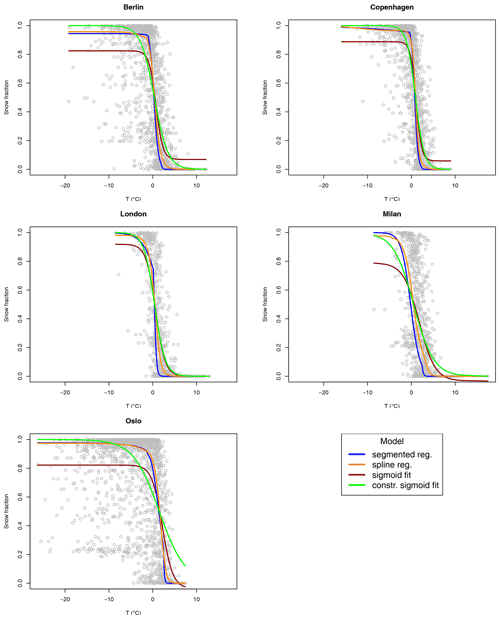

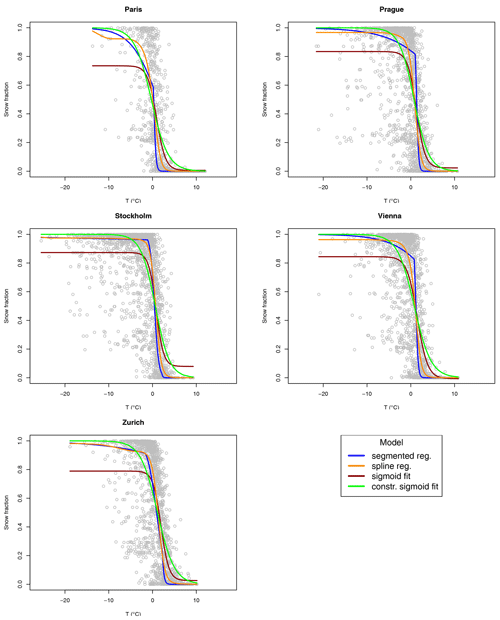

Figure 5Snow fraction as a function of temperature for the five ERA5 grid points closest to some European cities (gray circles). Solid lines represent fitted values for the segmented logit-linear regression (blue), spline regression (orange), sigmoid fit (dark red), and constrained sigmoid fit (green).

Figure 6Snow fraction as a function of temperature for the five ERA5 grid points closest to some European cities (gray circles). Solid lines represent fitted values for the segmented logit-linear regression (blue), spline regression (orange), sigmoid fit (dark red), and constrained sigmoid fit (green).

Figures 5 and 6 show the transition for a total of 10 locations corresponding to the closest grid points to some European capitals and the fits resulting from the segmented logit-linear regression, spline regression, and sigmoid fit, which are both constrained and unconstrained.

It is clear that the unconstrained sigmoid fit has some issues with extreme values of fs, especially in catching the saturation at fs=1 for very low values of T. The constrained version of the sigmoid fit is well-behaved at the extremes of the transition; however, in most of the shown locations, it is less accurate than the logit-linear segmented and cubic spline regression in following the overall transition.

As expected, fitted values from segmented and spline regressions are quite close, with the splines giving the smoothest result, since they are continuous at the knots by construction.

It is clear that, overall, the segmented logit-linear regression and the spline regression perform significantly better at reconstructing the snowfall compared to the STM. However, these results do not constitute strong evidence towards a better performance in reconstructing snowfall in practical cases, i.e., when it is unobserved or severely biased. To this purpose, we will apply both methods to reconstruct the snowfall in a climate projection model, and we assess which one produces the least biased snowfall using bias-adjusted temperature and precipitation as an input.

As an additional element to evaluate the performance of the identified methods, we assess if they can produce robust snowfall estimates in a pseudo climate change scenario. In order to do so, we repeat the validation procedure described in Sect. 4; after ordering the ERA5 dataset based on the annual DJF average temperature, we take the coldest 25 % as the training set and the warmest 25 % as the test set. We run this procedure for all methods, except the 2 ∘C STM, which clearly showed the poorest performance and will not be considered again in the following.

Figure 7Performance of the statistical methods for the prediction of snow fraction, trained on the 25 % coldest ERA5 years, in forecasting in the 25 % warmest years. (a–e) Maps of Pearson's correlation coefficient between prediction and reanalysis. (f, g) Comparison of the box plots of the correlation coefficient and of the statistical method performance in terms of RMSE and MAE, respectively. White points over northern Europe and Russia indicate non-convergence of the two sigmoid fits.

Figure 7 shows the statistical model performance metrics in analogy with Figs. 3 and 4. Figure 7a–e display the map of the event-to-event correlation coefficient, showing overall higher values than for the random training and test sets, which is arguably due to more frequent snowfall in the top 25 % coldest years providing more training data. On the other hand, both versions of the sigmoid fit exhibit convergence problems over a larger number of grid points, despite still being limited to the Alps, Scandinavia, and Russia, with the worst performance provided by the unconstrained fit.

Similar to the case of randomly drawn train and test sets, the STM shows the lowest correlations, followed by the segmented logit-linear and spline regressions, as shown by the box plot in Fig. 7f. The sigmoid fits display less variability in the lower tail of the box plot, probably thanks to the less dramatic lowering in correlation over western France, especially when compared to the spline regression. The performance in terms of RMSE and MAE (Fig. 7g) is also comparable to the random train and test sets case, with cubic splines performing the best.

Overall, assuming that separating cold and warm years can be a proxy of climate change to assess statistical model performance, the two regressions perform very similarly to the general case in terms of forecasting error, without any visible improvement or decrease in accuracy. As expected, the STM has the worst performance, while the sigmoid fit could present advantages over some areas but more pronounced convergence problems where DJF precipitation mainly falls as snow. However, we observe an improvement in the correlation between predicted and true forecasting values. We argue that this effect is likely due to precipitation patterns in years characterized by extreme temperatures in the historical period, and it should not be expected to happen under future climate change.

5.3 Bias correction of climate simulations

We now assess the performance of the considered methods on the output of the IPSL_WRF climate simulations for the period 1979–2005. For this RCM, we have near-surface temperature and total daily precipitation bias-corrected using CDF-t with respect to ERA5. However, available snowfall data are not corrected, and this presents non-negligible differences compared to ERA5 in terms of both the long run statistics and probability distribution of daily snowfall.

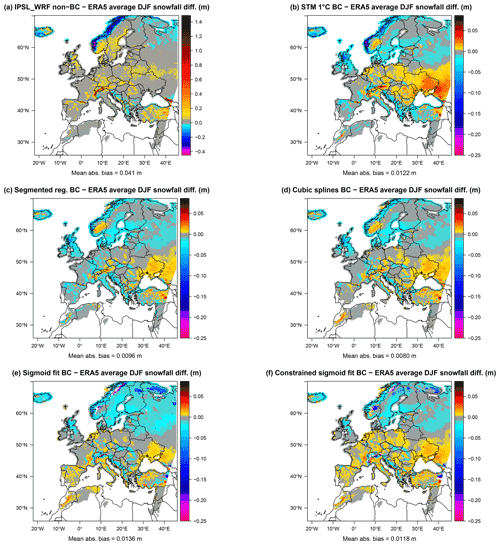

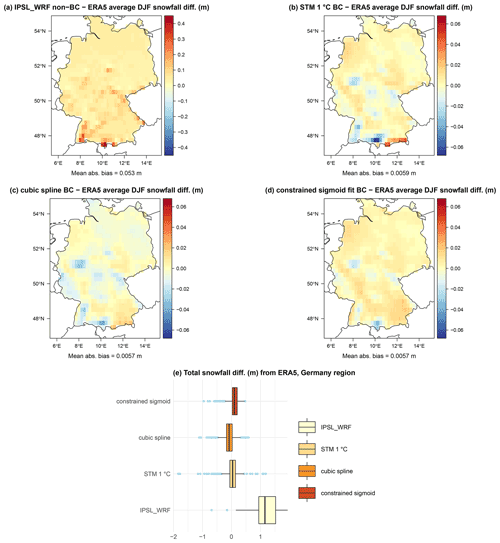

Figure 8Difference in meters between the statistical approximation and reanalysis average of DJF snowfall for the years 1979–2005. The IPSL_WRF model (a), 1 ∘C STM (b), segmented logit-linear regression (c), cubic spline regression (d), unconstrained (e), and constrained sigmoid function (f) are shown.

Figure 8 shows the differences in average DJF snowfall between IPSL_WRF and ERA5 and between each statistical method and ERA5. The RCM displays larger biases compared to the statistical methods, especially the positive values over the Alps and negative bias over the coast of Norway and, with smaller values, over western Iceland, the Balkans, and northern Russia. Overall, all methods produce a decrease in snowfall bias, including the STM.

It is also worth noting the different spatial distribution of the bias sign, depending on the method. The two versions of the sigmoid fit are the only methods that avoid the positive bias patch over Norway; however, the unconstrained sigmoid is characterized by larger areas of negative bias between Scandinavia and Russia, while the constrained version produces more positively biased values over central Europe.

To assess the overall performance of the models, we compute the mean absolute bias as the spatial mean of the absolute differences between modeled and ERA5 DJF snowfall differences. The cubic splines produce the smallest bias (0.008 m), followed by segmented regression (0.0096 m), constrained sigmoid (0.0118 m), STM (0.0122 m), unconstrained sigmoid (0.0136 m), and non-bias-corrected IPSL_WRF (0.041 m).

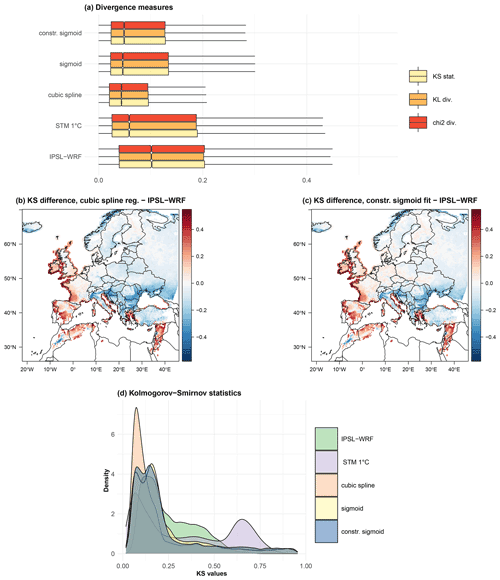

Figure 9Performance of the statistical methods as bias correction methods of snowfall in terms of statistical divergences. Divergence measures box plots for statistical methods and raw IPSL_WRF simulations (a). Maps of KS statistical differences between IPSL_WRF and spline regression (b) and constrained sigmoid fit (c). Comparison of the probability density function of the KS statistics associated with the four considered statistical methods and the uncorrected IPSL_WRF model (d).

We evaluate the performance of the STM, cubic spline regression, and the two versions of the sigmoid fit and compare it to the values produced by the IPSL_WRF model, using three measures of proximity between probability distributions of SF, as described in Sect. 4 (KS, KL, χ2). At each grid point, we estimate the three divergence measures between the empirical probability distribution of ERA5 and of each statistical method. We do not consider the segmented logit-linear regression, since results produced by such a method are almost identical to the ones obtained using spline regression, but require also the breakpoint analysis, which is a lengthy and computationally expensive step for large datasets, making it a less attractive candidate.

The results are summarized in Fig. 9a through the box plots of the values considering the entire domain. Outliers are excluded for greater graphical clarity. For each method, the three divergence measures assume similar values overall. All methods to approximate snowfall produce smaller values of the statistical divergences compared to the raw IPSL_WRF output. The largest dissimilarity is observed for the STM: as already mentioned, such a method can be expected to produce accurate snowfall climatologies, but the resulting SF distributions cannot be realistic, given the binary partition.

The spline regression appears to be the best method in terms of both median and variability, as it consistently displays the smallest interquartile range (IQR) for all the three measures. However, while χ2 and KL produce very homogeneous values over the domain (not shown), KS is more spatially differentiated and also has larger outlier values. Figure 9b and c show the spatial distribution of the difference between IPSL_WRF KS distance compared to cubic spline regression and constrained sigmoid fit, respectively. Both methods display a clear spatial coherence, showing that improvements in terms of KS statistics concern central and eastern Europe, especially below 45∘ latitude. Figure 9d shows the distributions of the KS distance for the IPSL_WRF and the four discussed methods. Once again, smallest values are observed for the cubic spline that appears to be the best-performing method when considering the domain as a whole. Almost no difference is found between the constrained and the unconstrained version of the sigmoid fit.

5.3.1 Regional extremes

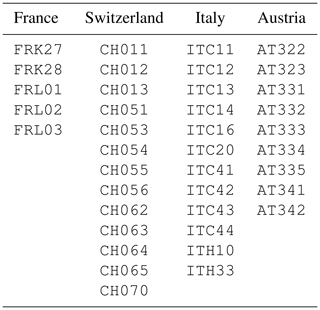

The results discussed so far show that the IPSL_WRF model is affected by a spatially inhomogeneous and sometimes important bias, and that the snowfall reconstruction based on the methods selected in Sect. 5.2 produces more realistic values on the majority of grid points. In particular, the greatest advantage seems to be in very snowy areas, such as the Alps and Norway, where post-correction biases are comparable to the rest of the domain, while those in the IPSL_WRF model are roughly up to an order of magnitude larger. Other areas, such as central Europe, are characterized by smaller biases, even in the raw climate model output and in the STM. In this paragraph, we focus our analysis over limited areas with high (the Alps and Norway) and low (France and Germany) bias. Here, we only show results for the constrained version of the sigmoid function. We define the Alps region based on Eurostat 2016 nomenclature of territorial units for statistics (NUTS 3), choosing provinces belonging to France, Switzerland, Italy, and Austria characterized by large values of total 1979–2005 snowfall and large differences between ERA5 and IPSL_WRF. In particular, we include the regions marked by the NUTS 3 codes listed in Table A1.

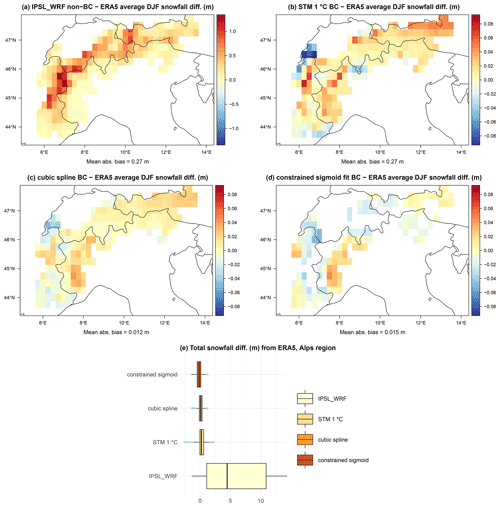

Figure 10Maps of average 1979–2005 DJF snow differences over the Alps region between raw IPSL_WRF (a), STM (b), cubic spline regression (c), sigmoid fit (d) bias correction, and ERA5. Corresponding summary box plots are shown in panel (e).

The IPSL_WRF simulation is characterized by a large positive bias over the Alps, with values up to ∼1 m difference in the average 1979–2005 DJF snowfall, as shown in Fig. 10a. Figure 10b–d clearly show the dramatic reduction in the bias for all the statistical methods, with values decreasing from ∼0.5–1 to ∼0.05 m especially in the most affected areas of the central–western Alps. As already mentioned, however, the sigmoid fit does not converge over several grid points over the Alps, making this method not suitable for snowfall reconstruction over this region.

Overall, the median bias of the STM and spline regression remains slightly positive, but the IQR of the differences with respect to ERA5 is reduced by around an order of magnitude, as evident from Fig. 10e. The overall best performance in terms of average DJF snowfall is shown by the spline regression, with both the smallest residual bias and variability.

Since comparing IPSL_WRF and its adjusted versions to ERA5 does not provide a one-on-one correspondence between snowfall events, it is not possible to compute correlation coefficients between reanalysis and statistically reconstructed snowfall time series at each grid point as in Fig. 4. Instead, we can study the correlation between the total 1979–2005 ERA5 snowfall and the total 1979–2005 snowfall simulated by IPSL_WRF and approximated with the STM, cubic spline regression, and sigmoid fit at each grid point.

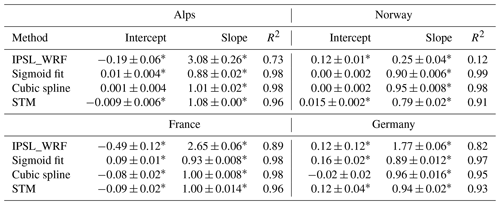

The upper left quadrant of Table 3 reports values of the intercept and slope of the least squares line plus the determination coefficient; the asterisks mark coefficients that are significantly different from 0 at the 5 % level. The slope estimate is accompanied by its 95 % confidence interval, obtained as ±2 s.e., where s.e. is the standard error.

In case of an ideal bias correction method, differences between the reference dataset and corrected model output would be a sequence of uncorrelated zero-mean Gaussian random variables. This implies a linear relationship with zero (nonsignificant) intercept, a significant slope close to 1 (i.e., with 1 included in the confidence interval), and an R2 as close to 1 as possible. The intercept of the IPSL_WRF is significant and negative, the slope is significantly different from 1, and a determination coefficient is R2=0.73. All the statistical methods produce a remarkable improvement in the parameters, with the cubic spline regression displaying the values closest to the ideal case. These results demonstrate a marked improvement of the long run snowfall statistics over the Alps obtained with any of the considered statistical approximations, compared to the uncorrected climate model snowfall.

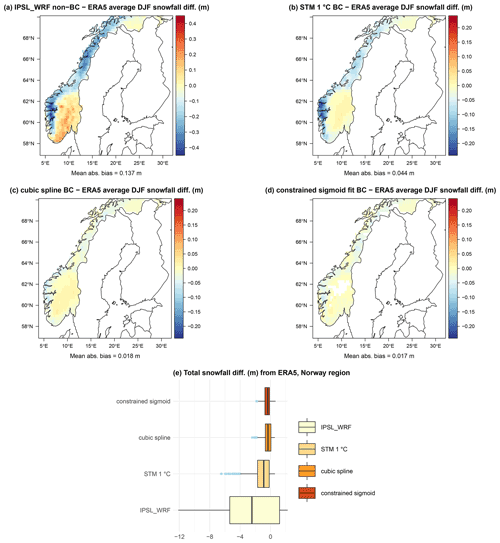

Figure 11Maps of average 1979–2005 DJF snow differences over Norway between raw IPSL_WRF (a), STM (b), cubic spline regression (c), sigmoid fit (d) bias correction, and ERA5. Corresponding summary box plots are shown in panel (e).

We repeat the same analysis for Norway, another area where DJF snowfall constitutes a large proportion of total DJF precipitation and the IPSL_WRF model shows large biases. As shown by Fig. 11a, IPSL_WRF presents a negative bias, up to −0.4 m on average for 1979–2005 DJF snowfall, on the entire western and northern sides of the country, and positive biases up to m on the southern areas, making snowfall over one of the most snowy areas in Europe heavily misrepresented. Again, Fig. 11b, c, and d show the reconstructed snowfall using STM, cubic spline regression, and constrained sigmoid fit, respectively. In this case, the bias pattern is still persistent after correction, with the exception of a passage from positive to negative values over the southern coast. The magnitude of the residual bias is reduced by a factor ranging from ∼2 for the STM to ∼5 for the spline regression and sigmoid fit, compared to IPSL_WRF. As for the Alps, the box plots of total snowfall over the country, shown in Fig. 11e, suggest that the best performance in terms of correction of average DJF snowfall is obtained using the cubic spline regression and by the sigmoid fit, without large differences between the two methods. However, while the mean absolute bias is similar for cubic splines and the sigmoid fit, the latter results in an area of non-convergence in a region characterized by large DJF snowfall totals. As for the Alps region, STM estimates are less biased compared to the raw IPSL_WRF output, even though its performance is less close to the one of the two other competing methods.

As shown in Table 3, the relationship between total DJF snowfall simulated by the IPSL_WRF model and ERA5 is characterized by poor correlation (R2=0.12) and regression parameters. Snowfall reconstruction via statistical methods produces, once again, a sizable improvement in snowfall estimation, with R2=0.91 for the STM and R2≥0.98 for the cubic splines and sigmoid fit.

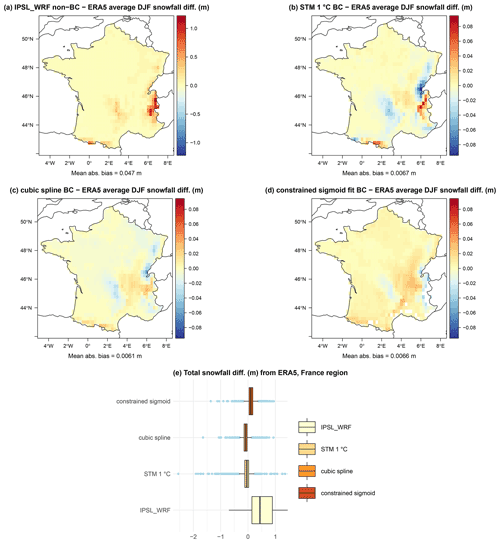

Figure 12Maps of average 1979–2005 DJF snow differences over France between raw IPSL_WRF (a), STM (b), cubic spline regression (c), sigmoid fit (d) bias correction, and ERA5. Corresponding summary box plots are shown in panel (e).

Figure 13Maps of average 1979–2005 DJF snow differences over Germany between raw IPSL_WRF (a), STM (b), cubic spline regression (c), sigmoid fit (d) bias correction, and ERA5. Corresponding summary box plots are shown in panel (e).

Both Norway and the Alps regions are characterized by mountain ranges subject to orographic forcing of moist air masses and by low DJF temperatures due to elevation (especially for the Alps) and latitude (Norway). Moreover, all methods show the lowest correlation between observed and reconstructed snowfall in the validation phase over these areas (see Fig. 4). We now repeat the same assessment for areas where snowfall is overall less abundant and driven by different mechanisms, and the statistical methods show higher correlations in the validation phase. Figure 12 shows results for France and Fig. 13 for Germany. Both countries are characterized by nonhomogeneous orography, continental areas, and coasts directly influenced by the Atlantic Ocean, the North Sea, and the Mediterranean Sea. In both cases, the IPSL_WRF bias is overall positive but smaller compared to the previously analyzed regions, with the largest values in the areas closest to the Alps region. In terms of average DJF snowfall (Figs. 12e and 13e), all three statistical models produce a visible improvement compared to the raw climate model, with some residual biases especially over the more elevated areas. Differences among models over these two areas appear to be negligible.

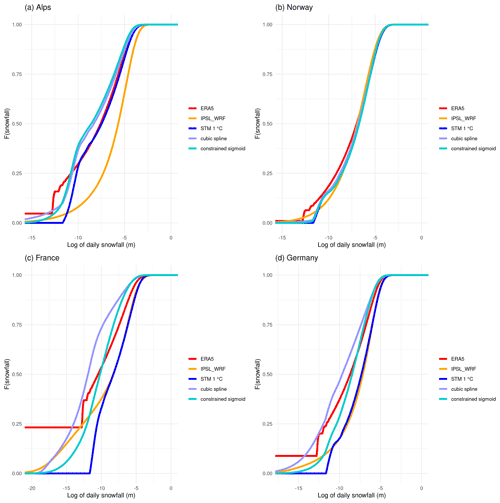

Figure 14Comparison between cumulative distribution function of daily snowfall in ERA5 reanalysis (red lines), raw IPSL_WRF model output (orange line), STM (blue line), cubic spline (purple line), and constrained sigmoid (turquoise line) bias correction for (a) the Alps, (b) Norway, (c) France, and (d) Germany. The distributions are shown in a natural logarithmic scale to magnify the differences.

As already mentioned, bias adjustment usually aims at correcting as much of the distribution of the observable as possible. To assess if and how well our methods work in this sense, we compare the empirical cumulative distribution function (ECDF) of daily snowfall, once again considering each region as homogeneous. Figure 14 shows the comparisons among the ECDFs with a logarithmic x axis to magnify small differences. In all cases, the IPSL_WRF distribution is visibly different from the reference. For France and Germany, the STM results in distributions very similar to those of the raw model, despite the small residual biases in snowfall totals, while it approximates the ERA5 distribution very well in the case of the Alps region. The two more complex methods produce a visible improvement in all cases, compared to the raw IPSL_WRF model.

In summary, we have shown that combining a breakpoint search algorithm and a flexible regression method – be it a segmented logit-linear or a cubic spline regression – allows for a reliable snowfall reconstruction in climate simulations. This method proves to be effective both in correcting large mean biases and in preserving the shape of the entire probability distribution of (daily) snowfall rather than only long run totals. This result is crucial for studying the characteristics of future snowfall in a wide range of environments, encompassing regions characterized by frequent and abundant snowfall in cold climates and temperate areas where occasional snowstorms and heavy wet-snow events can cause serious loss and damage.

We have presented four statistical methods to estimate the snowfall fraction of total DJF precipitation over Europe, provided that a reliable measure of near-surface temperature is available. This is a relevant problem in both hydrology and climatology, since an accurate estimation of snowfall is challenging in case of both observed or simulated precipitation.

In case of observational data, especially over large areas where a single weather station is not representative, snowfall is often unobserved due to difficulties in making its measurement an automated procedure. On the other hand, climate model outputs often include snowfall, but this is affected by a bias arising from the physical and mathematical approximations contained in the model scheme. For other variables such as temperature and total precipitation, we can rely on well-established and relatively simple univariate bias correction methods that can be applied pointwise in the case of gridded data. However, snowfall presents more challenges, since not only the magnitude but also the number of events is biased as a consequence of biases in the temperature. Thus, its correction would require conditioning on temperature and precipitation, and possibly including a stochastic generator of snowfall events to correct snowfall frequency, other than snowfall magnitude. Therefore, the availability of simple methods to reconstruct the snow fraction of total precipitation is a great advantage in contexts where much more complex and computationally costly procedures should be created and applied in order to obtain an accurate snowfall measurement.

The techniques applied in the existing literature mainly consist of a binary representation based on a threshold temperature, linear interpolations between two thresholds, and a binary representation outside of the inter-threshold interval or fitting parametric S-shaped functions with nonlinear least squares. The simple binary description is effective in its simplicity when the researcher is interested in particular in extreme events or long run total climatologies, but it cannot provide a reliable reproduction of the entire snowfall distribution. Moreover, the thresholds in the first two methods are often established simply by visual inspection of the plot of snow fraction against temperature, or analyzing the entire available dataset at once. However, in the case of gridded data over large areas, the optimal threshold values may vary, depending on the location.

The first considered method consists of a binary partition (STM), testing two possible thresholds at 1 and 2 ∘C. For our domain, the 1 ∘C proved to provide better results compared to the alternative.

The second method is a segmented linear regression on the logit of the snowfall fraction, informed about the location-specific optimal number of thresholds (between 0 and 2) via a breakpoint search algorithm.

As a more flexible alternative to segmented logit-linear regression, we introduced a nonlinear regression based on cubic splines, where the spline knots are taken as the deciles of the location-specific temperature distribution. This allows us to construct a flexible statistical model without requiring computationally intensive additional step such as the breakpoint search in the case of the segmented logit-linear regression.

Finally, we adopt a parametric nonlinear statistical model, in which the link between near-surface temperature and snow fraction is given by a hyperbolic tangent function, whose parameters are estimated via nonlinear least squares.

We used ERA5 reanalysis over Europe for the period 1979–2005 for validation, by estimating each statistical model at each grid point over a training set and comparing the performances in terms of prediction of out-of-sample values. In this validation phase, the STM provides much less accurate prediction compared to the more complex methods. The results obtained with the two regression models are very similar; however, the longer, computationally intense, and more complex procedure required to inform the segmented logit-linear regression makes it less advantageous compared to the spline regression. Finally, the constrained sigmoid fit produces results comparable overall to the spline regression over most grid points. However, it seems to be slightly less flexible than its competitors, as the fit can fail to converge over areas where DJF precipitation mainly falls as snow, such as Scandinavia and the Alps, or be negatively affected by outliers, as seen in the transition curve for Oslo. Based on our result, we conclude that this method could be superior to others for studies conducted over limited areas characterized by very smooth snow transitions. However, it does not seem to be adequate for studies over very large domains or when using already validated and published datasets that could still contain some noisy data, as is the case for snowfall in ERA5.

Results hold when using the 25 % coldest and warmest years as training and test sets, respectively. This observation is encouraging in view of the application of the analyzed techniques to study snowfall under different climate projection scenarios, provided that the performance can be reproduced when considering climate simulations instead of reanalysis.

To tackle this question, we consider the historical period of 1979–2005 in the IPSL_WRF high-resolution climate simulation model, for which bias-corrected near-surface temperature and total precipitation are available, while snowfall is not adjusted, showing very large biases with respect to ERA5, especially over areas characterized by frequent and abundant snowfall. We find that the point-specific estimates obtained in the model selection phase can be applied to this dataset to obtain snowfall estimates much closer to reanalysis compared to the raw IPSL_WRF data. We exclude the segmented logit-linear regression, since its performance did not show differences compared to the spline regression, making its higher complexity not justified.

We validate our results by both considering the entire domain and specific regions. We find that, in general, the reconstructed snowfall improves remarkably in terms of long run statistics and similarly between probability distributions with all methods, proving that they can be used in place of more complex multivariate bias correction schemes. However, it is clear that the best method depends on both the geographical area and the objective. For example, over the Alps, the best performance is given by the spline regression both in terms of average DJF snowfall bias and distribution of daily snowfall events but without dramatic differences among the three methods; over Norway, another region characterized by large DJF snowfall, the STM performs discernibly worse than the other two methods in terms of average snowfall, but it produces the best bias correction of the daily snowfall distribution, even though with no dramatic differences compared to the spline regression and sigmoid fit. For areas such as France and Germany, the STM is competitive against more complex methods in terms of both snowfall averages and daily snowfall distribution.

Overall, among the tested methods, we discard the segmented logit-linear regression, which requires a time-consuming step to perform breakpoint analysis over the grid and without performing better than its competitors. We remark that, to reproduce average DJF snowfall, the simple STM can be applied with success; however, unlike what is reported in Faranda (2020), its performance is sensitive to the choice of the threshold temperature, as we find that a value of 1 ∘C improves snowfall reconstruction compared to 2 ∘C, in line with Jennings et al. (2018). In some areas, such as Germany, the sigmoid fit produces the best results, but it can be a poor choice to approximate the snowfall fraction over areas where most precipitation falls as snow in the considered season. Overall, we find that the cubic spline regression with knots given by temperature quantiles (deciles in our case) has the best tradeoff between feasibility and accuracy of results. However, for studies over limited areas, it could be worth comparing different specifications (e.g., STM, spline regression, and a sigmoid fit) on reanalysis or observations, to establish which techniques produce the most accurate results.

Limitations

We also clarify some of the limitations of our analysis. The nature of climate datasets makes multiple comparisons among methods and BC techniques very demanding in terms of data storage and computational time. For this reason, we limited our analysis to one reanalysis dataset (ERA5), one marginal bias correction technique (CDF-t), one climate projection model (IPSL_WRF), and to the DJF season.

We do not consider the choice of ERA5 problematic with respect to other gridded datasets that could be observational (e.g., E-OBSv20) or other reanalysis (e.g., NCEP/NCAR). While the actual values could change between datasets, we do not foresee this directly affecting the performance of the methodology we presented in terms of improvement of the raw simulations with respect the chosen reference dataset.

On the other hand, the choice of the BC may influence the outcome of our statistical modeling procedure. The CDF-t is applied marginally to each variable, so that there is no guarantee that the inter-variable correlations are correctly reproduced in the bias-corrected climate model output. Indeed, Meyer et al. (2019) showed that applying multivariate as opposed to univariate BC produces significant changes in estimated snow accumulation, stressing the importance of modeling the interdependence between precipitation and air temperature in hydrological studies focused on snowy areas. The choice of the BC, in general, should be tuned on the tradeoff between complexity and need for controlling specific feature, in this case the inter-variable correlation. In our case, we considered a climate dataset prepared in the context of the CORDEX-Adjust project, which is made available already adjusted with respect to ERA5 using marginal CDF-t. Our results show an improvement in snowfall representation even relying on marginal BC; however, we stress that the methodology should be validated again if used on datasets prepared with different BC techniques to assess whether this difference affects the predicting performance of the statistical method.

On the same note, we remark that prediction accuracy may vary across different climate models, due to the different physical approximations and parameterizations, which are likely to affect the relationship between near-surface temperature and precipitation. Due to these differences, even other RCMs from the EURO-CORDEX project may exhibit variability in the performance of the snowfall reconstruction. This holds true for all statistical methods cited in Sect. 1, as it is rarely the case that snowfall reconstruction techniques are tested over an ensemble of different climate models. Once more, we stress the importance of assessing the performance of the chosen methodology to approximate snow (or compare several of incidences thereof) by validating it on the historical period of the available climate models in reference to the available reanalysis/observation dataset.

We have presented four statistical methods to estimate the snowfall fraction of total precipitation, provided that a reliable measure of near-surface temperature is available. This is a relevant problem in both hydrology and climatology, since an accurate estimation of snowfall is challenging in the case of both observed or simulated precipitation.

There are two methods, namely the segmented logit-linear and the spline regressions, that are an extension of traditional precipitation-phase partitioning methods based on estimating the snowfall fraction of total precipitation on the base of one or multiple threshold temperatures. For the segmented logit-linear regression, we estimate the number of such thresholds by means of a breakpoint search algorithm, while splines do not require the specification of physically meaningful thresholds, and quantiles of the temperature can be assumed as knots. The other two methods, i.e., STM and sigmoid fit, are used in studies already present in the literature, even though not with the scope of eliminating biases in climate simulations.

The two regression models perform the best in terms of prediction error and correlation between real and reconstructed values in a train–test sets validation framework, based on the ERA5 reanalysis dataset. These two methods also show robustness with respect to possible non-stationarity, when choosing the 25 % coldest years as training set and the 25 % warmest years for testing. In this context, the sigmoid fit also produces good results, but it could fail in case the snow fraction transition curve is not well represented by an S-shaped function.

When applied to reconstructing snowfall in a regional circulation climate model, all techniques produce results with a markedly reduced bias respect to ERA5, when compared to raw climate model simulations.

We conclude that statistical methods based on spline regression and sigmoid fit, informed by bias-corrected temperature and precipitation, are capable of providing a reliable reconstruction of snowfall that can replace more complex bias correction techniques, with better performances than similar methods based on parametric assumptions or binary phase separation. For limited areas, and depending on the task, simpler single threshold methods can perform equally well and could be advantageous in case fast or computationally light procedures are needed.

A1 Breakpoint analysis and segmented logit-linear regression

In order to estimate the temperature thresholds, we rely on breakpoint analysis. This method was originally developed by Bai (1994) to detect and date a structural change in a linear time series model and later extended to the case of a time series with multiple structural breaks (Bai and Perron, 1998). The technique was further generalized by Bai and Perron (2003) to the simultaneous estimation of multiple breakpoints in multivariate regression. In the following, we rely on this formulation, implemented by Zeileis et al. (2003) in the R package strucchange (Kleiber et al., 2002).

The method can be summarized as follows. Let us first consider the case of a univariate response variable yi and a k×1 vector of explanatory variables xi, which evolve over time and are linked by a linear relationship, as follows:

where βi is a k×1 vector of regression coefficients, and εi a random term. The first component of x is the unit, so that the first component of β is the statistical model intercept. The null hypothesis when testing for structural change is that the slope βi is constant over time, i.e.,