the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Interpretable seasonal multisite hidden Markov model for stochastic rain generation in France

Emmanuel Gobet

Sylvie Parey

We present a lightweight stochastic weather generator (SWG) based on a multisite hidden Markov model (HMM) trained on a large area with French weather station data. Our model captures spatiotemporal precipitation patterns with a strong emphasis on seasonality and the accurate reproduction of dry and wet spell distributions. The hidden states serve as interpretable large-scale weather regimes, learned directly from the data without requiring exogenous inputs. Compared to existing approaches, it offers a robust balance between interpretability and performance, particularly for extremes. The model architecture enables seamless integration of additional weather variables. Finally, we demonstrate its application to future climate scenarios, highlighting how parameter evolution and extreme event distributions can be analyzed in a changing climate.

- Article

(12658 KB) - Full-text XML

- BibTeX

- EndNote

1.1 Context

The current context of climate change necessitates a careful analysis of industrial resilience in future climate conditions to anticipate adaptation needs. This includes estimating extreme hydrometeorological conditions, such as the frequency of long-lasting dry spells, which are critical for hydropower and nuclear generation. Parliamentary missions in France (Christophe and Pompili, 2018) have highlighted the need to quantify hydro-stress impacts on nuclear power generation. Similarly, understanding future hydrometeorological conditions is essential for farmers to develop robust agricultural strategies (see Pascual et al., 2017; Zhao et al., 2017; Parent et al., 2018, and references therein).

Rainfall can trigger natural hazards with diverse spatiotemporal characteristics, ranging from short-duration, localized intense showers to prolonged meteorological droughts affecting vast regions. As a result, precipitation modeling must be adapted to the specific hazard being addressed. Global and regional climate models can simulate the climate system and project its evolution under different forcing scenarios. However, they remain computationally expensive, limiting the number of simulations that can be performed. In practice, climate projections are made available in public repositories, such as the French national project DRIAS (Soubeyroux et al., 2021), which provides around 30 projections (see Sect. 8 for details). Yet, for accurate risk assessments, additional scenarios may be needed, leading to challenges in data augmentation and scenario resampling. Another limitation of climate models is their inability to fully capture local extremes, despite advancements in spatial resolution and process modeling (Luu et al., 2022). Consequently, stochastic weather generators remain widely used in impact studies. The recent IPCC Working Group 1 report of the 6th assessment (Arias et al., 2021) emphasizes the importance of such tools, stating that “Methodologies such as statistical downscaling, bias adjustment, and weather generators are beneficial as an interface between climate model projections and impact modeling and for deriving user-relevant indicators.” Unlike climate models, which represent the physical mechanisms governing climate evolution, stochastic weather generators are calibrated to reproduce the statistical properties of climate variables, including distributions, spatial and temporal correlations, and inter-variable dependencies in multivariate models.

Generating statistically coherent weather series in time and space is a challenging problem. Mathematically, these series form multivariate time-dependent data that are far from being independent and identically distributed. In simple terms, today's weather is strongly influenced by past conditions and spatial correlations with surrounding locations. Additionally, weather patterns evolve throughout the year and under climate change, making it essential to accurately reproduce both typical weather conditions and extreme events such as heavy precipitation and intense heatwaves.

For industries operating spatially scattered installations, a key concern is the simultaneous exposure of multiple facilities to the same extreme event. In electricity generation, prolonged, spatially extensive droughts can complicate grid management, making it crucial to assess their frequency to anticipate appropriate adaptation measures (International Energy Agency, 2022, e.g., Chap. 3 – Nuclear Power). Large-scale weather regimes can also significantly impact renewable energy production (van der Wiel et al., 2019). However, estimating the occurrence and intensity of such situations is not straightforward. Historical observations provide only a single realization among many possible trajectories influenced by climate variability. Climate models can expand the range of possible events but often fail to produce enough extreme cases for robust statistical analysis. For example, Lang and Poschlod (2024, Sect. 4.1) discuss an ensemble of 50 climate model simulations used to estimate return periods of heavy rainfall, while Fischer et al. (2023) show that an ensemble of 30 climate model simulations over 31 years each could not reproduce heatwaves of the same magnitude as the 2021 Pacific Northwest event. To address this limitation, they propose ensemble boosting methods to enrich simulations of extreme heatwaves. Stochastic weather generators (SWGs) offer a way to overcome this limitation by increasing the sample size, enabling better extreme event statistics at a manageable computational cost.

Beyond hazard modeling, stochastic weather generators are essential tools for climate stress testing (Robertson et al., 2007; Manzano and Ines, 2020; Ranger et al., 2022). By generating realistic large ensembles of weather simulations under a given climate scenario, they help decision-makers anticipate climate variability and implement proactive adaptation measures. Given the increasing impact of climate change, leveraging these models is crucial for building resilience and ensuring sustainability.

The purpose of this paper is to develop an interpretable parametric model that efficiently simulates spatially coherent rainfall patterns – both occurrences and amounts – across France, incorporating self-taught large-scale weather patterns. Unlike pure generative models based on neural networks (Goodfellow et al., 2014), the stochastic generator developed in this study benefits from easily interpretable parameters, enabling the incorporation of climate change factors (see Sect. 8.3). Recent studies (Miloshevich et al., 2024) compare the use of stochastic weather generators and deep learning models for extreme heatwave sampling, highlighting the complementary nature of these approaches. Other studies have shown that classical generative models are not adapted to learn heavy-tailed distributions (like rainfall), requiring specialized architecture dedicated to extremes (Allouche et al., 2022). Finally, enforcing physical constraints within such models remains a challenge, which is critical for generating long-term realistic weather simulations (Dueben and Bauer, 2018).

1.2 Background literature

Many stochastic weather generators are devoted to the generation of precipitation time series, as precipitation is a crucial variable for many impact studies in agriculture or hydrology. For reviews, see Wilks and Wilby (1999), Chen and Brissette (2014), Ailliot et al. (2015a), and Nguyen et al. (2023). Stochastic weather generator (SWG) development dates back to the 1980s, with the model proposed by Richardson (1981) to generate long samples of precipitation, minimum and maximum temperature, and solar radiation.

They can have different spatial scales, e.g., single-site models (Richardson, 1981), multisite models for closely located stations (Benoit et al., 2018), gridded-resolution models (Wilks, 2009; Dawkins et al., 2022), or models for widely separated stations (≳100 km) (Zucchini and Guttorp, 1991; Robertson et al., 2004). The temporal resolution can also vary, typically from sub-hourly (Cowpertwait et al., 2007) to hourly (Stoner and Economou, 2020) or daily rainfall amounts. Multisite daily stochastic generators will be the main focus of this work. A class of models focuses on nonparametric approaches, typically using resampling methods (e.g., Boé and Terray, 2008), which mix parametric and resampling techniques. The main drawback of these methods is that they cannot produce samples outside the observed distribution, limiting their usefulness for extreme value analysis. In such cases, parametric models are typically preferred.

The first important modeling choice is whether rain occurrence and rain amount should be generated separately. To simulate both rain occurrence and amounts simultaneously, one typically uses latent Gaussian variables, e.g., censored Gaussian latent variables (Bardossy and Plate, 1992; Ailliot et al., 2009; Baxevani and Lennartsson, 2015) and complex covariance structures (Flecher et al., 2010; Benoit et al., 2018; Bennett et al., 2018). These approaches conveniently model spatiotemporal dependencies within a common latent space using a covariance structure. They are highly flexible, allowing, for example, the easy inclusion of past dependence or other weather variables such as temperature. However, these approaches assume an underlying normal dependence, which is not always satisfied. Their computational complexity makes them very hard to train. Moreover, they tend to misrepresent dry and wet spells (e.g., Bennett et al., 2018, Fig. 4, or Baxevani and Lennartsson, 2015, Figs. 7 and 19), as they treat spatiotemporal dependence in rain occurrence and rain amounts the same way. One can argue that these are two distinct processes. In that case, modeling rain occurrences separately has typically been done using first-order Markov chains (Richardson, 1981), which can be easily extended to higher orders to better reproduce dry and wet spells (Srikanthan and Pegram, 2009). To model subsequent rain amounts, parametric distributions with light or heavy tails are used (see Chen and Brissette, 2014, for a comparison). In this second approach, addressing spatial dependence becomes challenging since it must be handled separately for rain amount and occurrence. To this end, a class of models referred to in this paper as WGEN models was proposed in Wilks (1998). It introduces a latent Gaussian copula to model correlations between rain occurrences at different sites. While this approach effectively reproduces pairwise correlations, it treats time and space dependence separately and, as we will show in Sect. 5.3, fails to capture large-scale, temporally persistent dry states.

Another option is to include spatial dependence using meteorologically defined weather types (e.g., dry, wet, or atmospheric circulation patterns), also referred to as weather regimes or circulation patterns. In this case, weather regimes are a finite set of large-scale patterns that characterize the weather of a given day. They can either be predefined and incorporated into the model as exogenous variables or inferred as latent variables; see Vaittinada Ayar et al. (2016) and Gutiérrez et al. (2019) for comparison of different approaches. For example, Vrac et al. (2007) identified weather types a priori through the classification of either precipitation data or exogenous atmospheric variables and trained a Markov-like precipitation model conditional on these inputs. Nguyen et al. (2024) applied a similar approach using a multivariate autoregressive model and a Gaussian latent model for rain occurrence and precipitation. Known dependence can also be incorporated using the generalized linear model (GLM) framework to make model parameters dependent on covariates such as previous-day dependence, known weather regimes, month of the year, and so on (see Yang et al., 2005; Chandler, 2020). See Holsclaw et al. (2016), Verdin et al. (2019), and Stoner and Economou (2020) for Bayesian versions of these GLM approaches. These approaches have two main drawbacks. First, they require selecting the relevant weather types or exogenous variables, along with the stochastic properties of precipitation conditional on weather types. See Philipp et al. (2016), Beck et al. (2016), and Huth et al. (2016) for comparisons and discussions on the impact of the choice of weather regimes based on synoptic variables. Additionally, Najibi et al. (2021) study the quality of a weather generator conditioned on different predefined weather patterns that were obtained by different methods. Second, the exogenous variables or weather regimes need to be specified by the user or accurately modeled in order to utilize the weather generator, which may pose limitations in certain applications.

To circumvent these difficulties, hidden Markov models (HMMs) introduce weather types as latent variables (Zucchini and Guttorp, 1991; Ailliot et al., 2009; Sansom and Thomson, 2010), which are directly inferred from the station and variable of interest without requiring additional data. This approach has the advantage of identifying weather regimes specifically adjusted to the weather characteristics of the area of interest (Najibi et al., 2021). The weather regimes are typically modeled using a latent Markov chain, and the distribution of the observations is conditioned on these latent states.

We may recall that it is not surprising from a probabilistic point of view that using latent variables to model complex dependencies might simplify analysis; see, for example, Kim et al. (2019) and Yamanishi (2023) for machine and deep learning reviews or Ghassempour et al. (2014) for time series clustering. In some very specific settings, it is even possible to prove that general exchangeable random variables can be realized as a mixture of product distributions (for some latent distribution) thanks to De Finetti's theorem (Diaconis and Freedman, 1980). In our setting, the exchangeability of the rain variables is not satisfied (e.g., rain occurrence probabilities differ at different weather stations); however, this case is still inspiring for modeling dependencies.

Coming back to multisite rain occurrence models, spatial HMMs have been proposed by Zucchini and Guttorp (1991), with the latent variable identified during inference shown to yield meaningful large-scale patterns (Robertson et al., 2004). In these papers, the main limitation is the conditional independence assumption, which states that multisite rain occurrences are independent given the weather states. This makes these generators suitable for widely separated stations where the assumption holds. In this paper, we adopt and verify this hypothesis a posteriori.

Other studies have incorporated exogenous weather variables into spatial HMMs to introduce more spatial correlations beyond those produced by the conditional independence assumption while also making them sensitive to other spatial phenomena. This is referred to in the literature as nonhomogeneous HMM (Hughes and Guttorp, 1994a, b; Hughes et al., 1999; Bellone et al., 2000; Greene et al., 2011) and used for statistical downscaling. As previously mentioned, selecting these additional dependencies is challenging and thus might not always be beneficial, as shown in Hughes and Guttorp (1994b, Table 4), where the authors, using an HMM-based model, compare a version with exogenous variables and one without. The conclusion is that the best model is the one without external forcing.

See Hughes and Guttorp (1994a) for a comparison of different model choices. In Kirshner (2005), the author provides an overview and tests different options for multivariate distributions, ranging from conditional independence to complex dependence structures, including tree structures.

Models incorporating spatial HMM conditional independence with rain amounts directly using exogenous variables have been explored (Bellone et al., 2000; Neykov et al., 2012; Holsclaw et al., 2016). In Kroiz et al. (2020), the model is first fitted under the conditional independence assumption without external variables, and then a Gaussian copula is applied conditional on the weather states to correlate rain amounts.

Finally, most approaches described above assume constant parameters over a period of interest, e.g., a month or a season. Therefore, time nonhomogeneity in the HMM, i.e., a transition matrix that depends on time, has also been proposed, for instance, to introduce wind diurnal cycles (Ailliot and Monbet, 2012; Ailliot et al., 2015b, or Touron, 2019a) for multivariate (temperature and precipitation) single-site HMM.

1.3 Our contribution

We introduce the seasonal hierarchical hidden Markov model (SHHMM), a lightweight seasonal model based on a hidden Markov model (HMM) for generating multisite and temporally realistic weather series, specifically precipitation. As in Touron (2019b), our model is fully time-nonhomogeneous, with parameters varying periodically throughout the year. The first layer consists of an autonomous spatial HMM for rain occurrences, similar to Zucchini and Guttorp (1991) and Robertson et al. (2004), while the second layer models multisite seasonal rainfall amounts conditioned on the learned hidden states.

1.3.1 Capturing large-scale dependencies

Our approach decomposes distributions using conditional independence with respect to hidden states, effectively capturing complex spatiotemporal dependencies without requiring external synoptic data. This ensures that large-scale weather regimes emerge naturally from the data. Moreover, they are shown to be robust across different station selections. To help with the station selection, we propose a simple metric to evaluate conditional independence. Rather than modeling rain amounts directly within the HMM, we focus on discrete rain occurrences. While previous attempts (Kroiz et al., 2020; Holsclaw et al., 2016) struggled to capture meaningful spatial correlations when including rain amounts in the hidden states, our results show that the inferred states remain interpretable and relevant for both rain occurrences and related meteorological variables such as mean sea level pressure. Unlike approaches that introduce additional spatial correlation structures (Hughes and Guttorp, 1994b; Kirshner et al., 2004), our model enforces conditional independence, ensuring that spatial dependencies are fully learned by the hidden states. This prevents ambiguity in model identification, where correlations could otherwise be absorbed by multiple components. Section 2.6 argues for the relevance of learning weather regimes with rain occurrences and conditional independence. In Sect. 2.5, the statistical identifiability of our model is discussed, showing in particular that a minimal number of stations is needed to be identifiable. Lastly, we propose a simple heuristic to initialize the SHHMM in the expectation maximization inference algorithm, which is known to be prone to local maxima (Cappé et al., 2005).

1.3.2 Improved temporal persistence and rainfall representation

To better reproduce wet and dry spell persistence, we introduce an additional autoregressive (local memory) Markov dependence (Cappé et al., 2005; Kirshner, 2005) to improve the simulation of spatiotemporal statistics. After generating rain occurrences, a Gaussian copula is used to conditionally add rain amounts, yielding significantly improved results over Kroiz et al. (2020). Our work contributes to the development of multisite SWGs for rainfall amounts, based on self-taught weather regimes, while explicitly decomposing the rain occurrence and amount processes.

1.3.3 Validation, comparison, and applications

We extensively validate the model's ability to reproduce key hydrometeorological statistics, including dry spell distributions and extreme rainfall accumulations. The model is compared with WGEN-type models (Wilks, 1998; Srikanthan and Pegram, 2009; Evin et al., 2018), which rely spatially on latent Gaussian structures and high-order Markov models locally. We show that our approach is more scalable in terms of complexity and better captures large-scale dry spells.

We also illustrate its usefulness in two climate-related applications: (i) estimating climate variability through multiple trajectory sampling, thereby showing how this can be used to compare climate models (used in IPCC reports) more accurately than with a single historical trajectory, and (ii) training our model on climate change scenarios and analyzing the evolution in terms of parameters and extremes.

Compared to existing multisite HMMs, our model uniquely combines local memory, seasonal parameter variation at low computational cost, and interpretable conditional rainfall generation. These properties make it suitable for studying large-scale risks such as prolonged droughts and extreme precipitation events, relevant in many applications.

Note that this multisite model generates weather data only at the training sites and hence cannot produce high-resolution fields. Nevertheless, multisite simulation remains highly useful in many operational contexts. For instance, in the energy sector, a critical question is the likelihood of prolonged dry spells affecting a large portion of the territory simultaneously. Such events can stress multiple power plants at once – particularly nuclear plants whose cooling systems depend on river flows, which are impacted by large-scale droughts. In this context, estimating the frequency of co-occurring dry episodes across regions is more relevant than reproducing detailed spatial variability. See Sect. 9 for a more detailed discussion on the perspective of this work. Similar challenges arise in large-scale agricultural production planning.

1.3.4 Software

The model and its code are available in the Julia package StochasticWeatherGenerators.jl (Métivier, 2024). It contains a reproducible step-by-step tutorial in its documentation describing all the data loading, training process, and simulations of the model described in this paper. Most figures in this paper can be exactly reproduced using the tutorial.

1.4 Organization of the paper

In Sect. 2, we describe the construction of the SHHMM. We explain in Sect. 3 the procedure to infer and select the model. Section 4 is entirely dedicated to the interpretation of the model parameters; in particular, the trained hidden states are interpreted as weather regimes for France and will be compared to other well-known weather regimes such as the North Atlantic Oscillation (NAO). In Sect. 5 we show simulation results for the spatiotemporal rain occurrence sequences with a special focus on extreme dry/wet sequences; we also compare our model to a WGEN-like model (Wilks, 1998). The actual rain amounts are then added on top of the previous model in Sect. 6 and tested in simulations in Sect. 7. In Sect. 8, we train our model with data from climate models on a reference historical period and on future climate change scenarios and discuss the results.

1.5 Notations used in the paper

For a positive integer M, we set . If Θ is a finite set, denotes its cardinality. We make the distinction between t for a day and n for a date; see Sect. 2.2. The number of days is T=366.

In this section, we introduce the statistical models considered in this work. The underlying mathematical framework is based on hidden Markov models (HMMs), which we develop and adapt with a focus on their application to stochastic weather generation.

2.1 Data

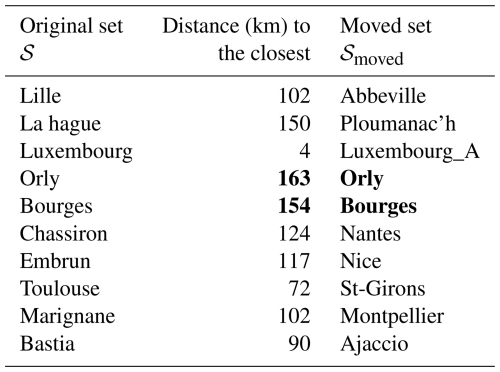

Daily rainfall observation time series are extracted from the European Climate Assessment & Dataset (ECA&D) (Klein Tank, 2002). We focus only on stations in France and close by. Among the available ECA&D weather stations in France and Luxembourg, 66 stations have 100 % valid data from 1 January 1956 to 31 December 2019, i.e., a 64-year range and, 23 376 data rows. We select S=10 of these stations in all of France (and Luxembourg): these weather stations are indexed with .

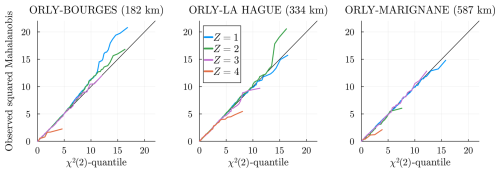

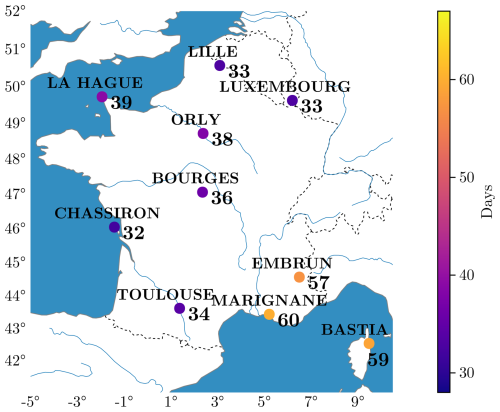

This weather generator scales to the size of France and aims to capture large-scale, interpretable weather patterns. Hence, we select the stations to be as representative as possible of French weather. As explained in Sect. 2.2, where the conditional independence hypothesis is presented, the major limitation of the model is its requirement for some degree of independence between stations. In principle, we should define a criterion to optimize the selection of S=10 stations among the 66 available. Even with a simple approach, such as maximizing the mean distance between station pairs, the problem is computationally intractable, as there are possibilities. A more relevant yet even costlier criterion is the MSECI in Eq. (19) that is the mean square error (MSE) between observed and simulated rain occurrence correlations. To select 𝒮, we start with a reasonable initial configuration and iteratively perturb it by changing a few stations, retaining the set with the minimum MSECI. In Appendix C, we show that replacing 8 out of the 10 stations in 𝒮 with nearby ones does not affect the model's interpretation in terms of weather patterns. We show in Fig. 1 all the selected stations; in addition, we report in the heatmap scale the historical maximum of consecutive days without rain – dry spell – at each location. One of the goals of our modeling is to reproduce similar records. In Sect. 8, we will investigate how our model (and its parameters) evolves when historical data are replaced by future projection data according to some Representative Concentration Pathway (RCP) scenarios.

Figure 1The 10 selected stations with their respective dry spell historical records over the period 1956–2019 (length in number of days).

The N=23 376 consecutive weather observations are labeled with . The multisite rain amount (MRA for short) is calculated as

for date n, where is the daily rainfall amount at site s. Note that rainfall is measured in millimeters (mm) of depth typically using a rain gauge, which physically corresponds to a volume per unit area – that is, 1 mm of rainfall equals 1 L m−2. It is the RR variable in the ECA dataset. Similarly, the multisite rain occurrence (MRO for short) is

where each , where dry means no rain and wet means nonzero rain, i.e., of daily cumulated rain.

In Sect. 4.1.2, to connect the weather patterns inferred by the model with physically meaningful atmospheric patterns, sea level pressure reanalysis data from the ERA5 reanalysis (Hersbach et al., 2020) are used. ERA5 is the latest climatic reanalysis produced by the ECMWF (European Centre for Medium-Range Weather Forecasts), providing hourly time series for various atmospheric, oceanic, and land surface parameters over the historical period from 1940 onward. It utilizes a 4D-Var data assimilation process to produce data on a 0.25° spatial resolution grid and is freely available on the Copernicus Climate Change Climate Data Store.

Lastly, in Sect. 8, two climate projections provided by the French climate service DRIAS (Soubeyroux et al., 2021), operated by Météo-France and Institut Pierre-Simon Laplace, are used. Developed to provide the best climate change information at the French national level for practitioners, DRIAS offers projections based on the Euro-CORDEX regionalization initiative, further statistically downscaled over France at an 8km resolution. A total of 42 simulations are available: 12 for the historical period (1951–2005) and 30 for the future (2006–2100), with 12 using the RCP8.5 scenario, 10 using RCP4.5, and 8 using RCP2.6. Among this set of projections, only two are used here, covering the historical period and the RCP8.5 scenario:

-

CNRM-ALADIN63, regionalizing the CNRM-CM5 global climate model with the ALADIN63 regional climate model

-

IPSL-WRF381P, regionalizing the IPSL-CM5A-MR global climate model with the Weather Research and Forecasting (WRF) regional climate model

The next subsections are devoted to the design of the model for the evolution of the MRO. The actual nonzero rain amount will be added on top of the model after it is trained in Sect. 6. Our approach relies on a hidden Markov model: generally speaking, it is made of a hidden component (that should be inferred) and of an observed one (here the MRO). All processes are discrete-time processes. See Cappé et al. (2005) for a general account about hidden Markov models.

2.2 Seasonal hidden Markov model (SHMM), model 𝒞0

For the sake of clarity, we start with a simplified model, which will be extended hereafter. See Zucchini and MacDonald (2009) for an introduction to hidden Markov models for time series. Consider first the hidden component Z, common to all stations s∈𝒮: it can take discrete values in that will be later interpreted as climate states for the region of interest, here France. We will thus refer to this variable as a weather regime (WR), as often done in the literature (e.g., van der Wiel et al., 2019). Note that other equivalent names also exist in the literature, such as weather types, weather patterns, or atmospheric circulation patterns.

The time evolution of follows a nonhomogeneous Markov chain on the state space 𝒦, with initial distribution , i.e., , and transition matrix for n≥1,

To fit the climate context, we assume that the transition matrix Qn is a T-periodic function of n with T=366, i.e., ; we will thus refer to the Markov chain as a seasonal Markov chain. In that case, we will distinguish between the label day of the year and the label full date n used to denote the position in the sequence. Each n corresponds to one t, but for each t there are as many date n∈𝒟 variables as the number of periods in the sequence: the matrices Q depend on time only through the day t. If T was equal to 1, this SHMM would be a regular homogeneous HMM, i.e., a constant matrix for all n∈𝒟 values. Next, we design the model for the time evolution of the MRO Y. The intuition behind the choice of well-spread stations is that local weather variables Y, conditional on weather regimes Z, are independent. In addition, we assume that the conditional distribution of Y(n) does not depend on the past of Y and is also periodic. All is summarized in the following assumption.

- (H-𝒞0)

-

Z evolves as a seasonal Markov chain with period T=366. Conditional on the process , the spatial components are independent and, furthermore, the conditional distribution of each only depends on Z(n). This is a Bernoulli distribution describing the probability of rain at a station s and date n, conditional on Z(n)=k: it is denoted as (called emission distribution in the HMM literature) and assumed to T-periodic, i.e., , and thus represented as

for some parameters .

The above model for is referred1 to as 𝒞0 and called a seasonal hidden Markov model (SHMM), with period T, initial distribution , transition matrix Qt, and distributions . This SHMM terminology is borrowed from Touron (2019a).

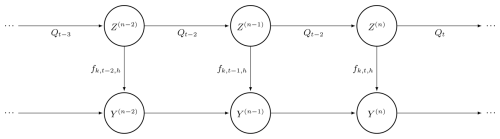

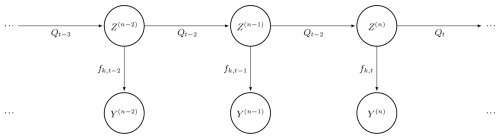

The SHMM chain is illustrated in Fig. 2.

Figure 2A seasonal hidden Markov process , where Z represents the hidden variables (weather regimes), Y the observed multisite rain occurrence (MRO), Q the transition matrix, and f the distribution of the observations.

A few remarks before going further can be found below.

-

This model accounts for leap years: for instance, the date corresponds to 28 February 1957, i.e., to the day t=59, while the next date n=426, 1 March 1957, is the day t=61. All 29 February dates are labeled with t=60. With this convention, the estimation of parameters for t=60 will be performed with 3 times fewer data than for other dates; nevertheless, it will have a quite minor impact on the procedure because of the time smoothing of parameters discussed in Sect. 2.4.

-

The annual periodicity of the distributions Qt and is questionable. On the one hand, for obvious reasons of statistical inference, it is not possible to try to estimate as many distributions (parameterized by n∈𝒟) as there are data available, which leads to the reasonable assumption of annual stationarity as in Touron (2019b). On the other hand, annual stationarity is probably not accurate considering climate change. In our methodology, the calibrated parameters should be understood as valid over the data horizon used. We will see in Sect. 8 that shifting the data period into the future (using climate projection under different RCPs) will cause some parameters to evolve. Let us mention some tests in Touron (2019b, Chap. V) showing that the effect of climate change on precipitation is not easily identifiable (unlike for temperatures), supporting the stationarity hypothesis of our model. Including nonstationary effects with spatial HMM would require modifying the model to allow exogenous variables like in Bellone et al. (2000), Greene et al. (2011), and Dawkins et al. (2022) and will not be explored in this paper.

As a consequence of the spatial independence assumption in Sect. 2.2, the conditional likelihood of the MRO at date n is given by

This probability depends only on n by the corresponding day t. This assumption forces the model to learn spatial features (and spatial dependence) through the hidden states.

Later in this paper, we show (see Fig. B2) that this SHMM produces, in general, shorter dry or wet spells than the ones observed, suggesting that the Markov dynamics of the weather regime Z are not enough to stochastically explain the temporal evolution of the MRO Y. Indeed, Z is a weather regime over all of France and does not take into account the local dynamics of rain occurrence , i.e., that in addition to being influenced by the global weather, local weather should also be dependent on the local previous day's MRO . Hence, it makes sense to define the dynamics of the MRO conditional on several previous days. This is the raison-d'être of the next models 𝒞m and m>0.

We end this section with another set of remarks considering our model assumptions, which will also apply to 𝒞m>0 models.

-

The conditional independence hypothesis in Sect. 2.2 is discussed in Sect. 2.6.

-

Note that conditional independence does not imply independence between stations. The model will learn, through the hidden states, “long-range correlation”, whereas conditional independence will mean that there is no short-range correlation. The actual correlations between the selected stations can be seen in Fig. 13 for MRO and range between 0 and ≃0.5.

-

In Hughes and Guttorp (1994b, Fig. 3), the pairwise correlation conditional on hidden states (and synoptic forcing) is shown to decrease very quickly with distance. Typically, the characteristic decay length is around 50 km for most station pairs.

2.3 Seasonal hierarchical hidden Markov model (SHHMM), model 𝒞m with m>0

To better reproduce the dry and wet spell distributions, we consider additional local conditioning. Different lengths of this additional local conditioning will correspond to different models 𝒞m (with some memory parameter ). Intuitively, models with history 𝒞m>0 should display better temporal persistence than the 𝒞0 model' i.e., consecutive day sequence statistics should be replicated better. On the other hand, these models 𝒞m>0 require more parameters to be fitted for the same number of data, and thus one should expect statistically less accurate estimates if m is too large.

Given m>0, we introduce the history variable

and its local analog . The following hypothesis 𝒞m summarizes the model.

- (H-𝒞m)

-

Z evolves as a seasonal Markov chain with period T=366. Conditional on the hidden variable and the local history H(n), the spatial components are independent, and, furthermore, the conditional distribution of each only depends on Z(n) and . This distribution is a Bernoulli distribution describing the probability of rain at a station s and date n, conditional on Z(n)=k and : it is denoted as , assumed to be T-periodic, and represented as

for some parameters depending only on n by the associated day t, the hidden state k, and the m previous days' observation value hs at the station s.

As a consequence, and similarly to Eq. (5),

The 𝒞m>0 models are defined by , where the law of the first observations , where is added.

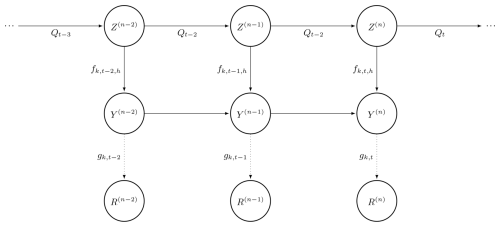

Regarding the usual terminology of hidden Markov chains, the model 𝒞0 is a standard (periodic) HMM (Cappé et al., 2005, Sect. 2.2) since the observed variables are independent given the hidden variables . For other models , because of the dependence with respect to previous days through , we are rather in the presence of autoregressive HMMs as described in Kirshner (2005, Sect. 3.1.1) (also discussed in Cappé et al., 2005, Sect. 2.2.3, under the name hierarchical HMMs): conditional on , the MRO process evolves as a Markov chain with memory m. This is a significant difference from other precipitation models in the literature, such as Touron (2019a), Holsclaw et al. (2016), and Kroiz et al. (2020).

In the remainder of the article, we will use the term seasonal hierarchical hidden Markov model (SHHMM) to refer to the model 𝒞m>0. Note that we will also use the same term to describe the full model, i.e., 𝒞m>0 with added rainfall amounts (see Sect. 6).

We illustrate the 𝒞m=1 model in Fig. 3.

Note that a time-independent autoregressive HMM was proposed in the PhD work of Kirshner (2005, Sect. 6.1.1) as a promising option but, to the best of our knowledge, has not been explored further. However, the combination of HMM with local memory, seasonality (see Sect. 2.4), and the subsequent addition of rainfall amount (see Sect. 6) appears to be new.

2.4 Hypothesis and modeling of the time regularity of parameters

The previous models 𝒞0 and 𝒞m>0 depend on the T-periodic functions . A quick inspection of the number of scalar parameters to estimate on each day t∈𝒯 gives

-

K(K−1) coefficients for the transition matrix Qt and

-

coefficients for the Bernoulli distribution parameter for all and hs.

For S=10, K=4, and m=1, it gives 92 scalar parameters, which is larger than the number of available data at each day t (64 for usual days and 16 for 29 February). On the one hand, estimating the parameters by maximizing the observed likelihood independently at each day t∈𝒯 is conceptually simple. On the other hand, the estimated parameters would suffer from high variance as there are too few data at each day t. Therefore, in the inference procedure that will be exposed in Sect. 3, a time regularity constraint will be imposed. This procedure (detailed later) will be essential to recover interpretable and meaningful results.

Let us argue in more detail. Intuitively, the timescale of variation of the model parameters should be of the order of magnitude of a month (30 d). Hence, once fitted, the parameters should evolve as a smooth function of day t. The advantages of imposing a smoothing are multiple:

-

This avoids unrealistic, erratic day-to-day changes in the parameters while allowing for a physically realistic seasonal evolution.

-

It helps to overcome the lack of data at each day t; indeed, the smoothing implies that the data from neighboring days are accounted for when making an inference at day t.

-

In terms of identifiability of the model, it is well-known that HMMs are identifiable up to relabeling of the hidden states. In the case of SHMM, the model is not identifiable up to the relabeling of hidden states at each day t (Touron, 2019a). Thus, it is very likely that a naive likelihood optimization routine gives quite different parameters on consecutive days, whereas for obvious interpretability reasons, we seek a smooth evolution as a function of the day t of the calendar year.

A popular choice in the literature is to use trigonometric polynomials (Langrock and Zucchini, 2011; Papastamatiou et al., 2018; Touron, 2019b) to parameterize the parameters as a function of the day t∈𝒯 (see Eqs. 8–9b below) and directly infer new parameters. The final SHMM or SHHMM is then only identifiable up to a global relabeling common to all t. Other methods, such as cyclic penalized splines (Feldmann et al., 2023; Dawkins et al., 2022), could have been considered. Thus, each parameter is composed with the trigonometric polynomial as follows: given some coefficients , set

for some degree Deg. For all k∈𝒦 the transition matrices are given by

and the Bernoulli parameters in Eqs. (4)–(6) by

The parameterization of Qt corresponds to the log-ratio transformation well-known in compositional data analysis (Pawlowsky-Glahn and Buccianti, 2011). These definitions ensure , and , , and . A model with a high degree Deg will be able to capture shorter and shorter sub-seasonal/monthly/sub-monthly phenomena.

A quick inspection of the number of parameters (the coefficients c) gives (for S=10, K=4, m=1, and Deg=1 corresponding to roughly four seasons) scalar parameters (for all t∈𝒯) instead of in the previous day-by-day parameterization. The gain is quite significant. However, the maximization step has no analytical solutions: the subsequent numerical optimization is heavy because now is not independent with respect to t∈𝒯. The resulting parametric problem is of lower dimensions but more complex to solve than the T individual problems.

In the rest of the paper, we denote by θ all the coefficients appearing in Eqs. (8)–(9b), and those are to be optimized:

2.5 Identifiability

For the inference problem to make sense, the model must be identifiable. Latent models are known to be only identifiable up to label swapping. Moreover, Bernoulli mixtures are known to be non-identifiable (Gyllenberg et al., 1994). However, they are identifiable under a weaker notion of generic identifiability up to label swapping if the following condition holds (Allman et al., 2009, Corollary 5):

Generically identifiable (Allman et al., 2009) implies in particular that the set of points for which identifiability does not hold has measure zero. Hence, for the applications, this notion is enough. For our application, we explore K being at most 8 so that S≥7.

In Touron (2019a, Theorem 1), the identifiability up to label swapping of the seasonal hidden Markov model is proven under the following assumptions.

-

For , the transition matrices are invertible and irreducible.

-

The matrix is ergodic, and its unique stationary distribution ξ∗ is the distribution of Z(1).

-

For each , the K distributions are linearly independent.

The star (∗) denotes the set of true parameters. The irreducibility and ergodicity are satisfied under the parametric assumption for Qt since all the matrix coefficients are strictly positive. The invertibility of Qt is proven to hold up to a negligible set of parameters (Touron, 2019a, Sect. 2.4.1) for our parametric choice. The second condition can be shown using the coefficients of Qt as strictly positive, so those of are also, and therefore is irreducible and aperiodic. To prove that the third assumption is satisfied in our case, we use the equivalence (Yakowitz and Spragins, 1968, Theorem Sect. 3) between linear independence of K distributions (νk)k∈𝒦 and the identifiability of the mixture for some weights (wk)k∈𝒦. Together with the condition in Eq. (11), it follows that the model 𝒞m=0 is generically identifiable up to a global relabeling. For higher-order models 𝒞m>0, the local memory (autoregressive structure) of the distribution prevents direct application of the previous results; however, one can reasonably expect a similar condition to hold.

2.6 Model justification

We end the modeling section with a discussion on our choice of having inferred weather regimes using rain occurrences only.

Training hidden state models with binary variables such as wet/dry is well-established in machine learning classification techniques (see Bishop, 2006). Hence, rather than using a complex distribution (rain amount) in the HMM, we first focus on discrete rain occurrences, as in a Bernoulli mixture. Discrete distributions might seem like a simplification compared to existing methods, where rain amounts are directly expressed as a mixture of an atomic and continuous distribution (Touron, 2019a) or modeled using censored Gaussian distributions (Ailliot et al., 2009; Baxevani and Lennartsson, 2015), or in the context of Markov switching models where complex weather variables are modeled (Ailliot and Monbet, 2012; Ailliot et al., 2015b; Monbet and Ailliot, 2017; Ailliot et al., 2020). However, it can be argued that rain occurrences and amounts are very distinct processes with different statistical properties (Wilks, 1998; Dunn, 2004; Yang et al., 2019). For example, Vaittinada Ayar et al. (2020) use a spatial censored latent Gaussian model (conditioned on predefined weather regimes, but that is not the point) with the rain amount R directly. Hence, it assumes the same spatial correlation coefficient for the variables R>0 and Y. Similarly, while in our model we induce autoregressive Markov local memory for rain occurrences Y, their model assumes temporal memory using a MAR(1) model for R.

As mentioned previously, attempts to directly include spatial rain amounts within the hidden states have not been completely satisfactory in terms of learned correlations (e.g., Kroiz et al., 2020, Fig. 1, Ailliot et al., 2009, Model Cγ, or Holsclaw et al., 2016), where no correlation check seems to have been performed. Our approach produces fully interpretable hidden states that are relevant not only for rain occurrence but also for other variables such as rain amounts and mean sea level pressure. This is made possible by our assumption described in Sect. 2.2, which is both a strength and a limitation of the model: it requires a sparse station distribution but forces the hidden states to learn spatial patterns with temporal Markov dependence. Hence, at smaller scales (or for a denser station distribution), this assumption might not hold, and other, often less interpretable, methods may be required.

In fact, we argue that more complex HMMs can be increasingly difficult to train and lack interpretability. See Pohle et al. (2017) and de Chaumaray et al. (2023) for discussions on how imperfect parametric distributions can, for example, lead to an overestimation of the number of hidden states. For instance, extreme precipitation events often fall outside the reach of standard parametric rain distributions and could affect the weather regimes. Hence, learning hidden states directly from rain amounts might affect their quality. Moreover, a higher number of states could be necessary, but this would come at the expense of robustness since they are identified from the same amount of data. The choice of Bernoulli distributions for binary variables is, however, exact, suggesting that our model will likely pick a smaller number of hidden states, i.e., more interpretable.

Moreover, we also argue that breaking conditional independence, as in Hughes and Guttorp (1994b) and Kirshner et al. (2004) (which are the only two attempts we found in that direction), must be done carefully, as it complicates model identification. Specifically, spatial correlation can either be learned by the hidden states or by the added correlation structure. The proportion of dependence captured by each component is not explicitly controlled, and in some cases, all correlations may be learned through the additional correlation structure, rendering the hidden states irrelevant. Enforcing conditional independence in our model ensures that all spatial dependencies are learned exclusively by the hidden states and is validated a posteriori (see Sect. 5.2.2). In addition, the complexity of models like that in Hughes and Guttorp (1994b) is such that it is not clear how many more stations could be added (with respect to the conditional independence model) before reaching computational limits.

In Touron (2019a), the maximum likelihood estimator is shown to be a consistent estimator for the seasonal HMM, i.e., 𝒞m=0. Proving the consistency for the autoregressive model 𝒞m>0 is outside the scope of this paper; however, we will still use the maximum likelihood estimator to infer the model parameters.

Maximizing the likelihood of a latent model is usually done with the expectation maximization (EM) algorithm. See McLachlan and Krishnan (2007) for a general review of the EM algorithm and its extensions. To maximize the log-likelihood of the SHHMM, we will use a heterogeneous version of the Baum–Welch algorithm, which is a special kind of EM algorithm for hidden Markov models. The details of the algorithm can be found in Appendix E. Note that in this paper, we do not consider Bayesian inference as in Stoner and Economou (2020) and Verdin et al. (2019). Hence, the estimated parameters will be deterministic, and the resulting SWG model will solely be responsible for the climate variability, i.e., the uncertainty in the parameters' estimation will not be accounted for. A known issue of EM algorithms is that they can converge to local maxima. As we will illustrate, a naive random initialization of the algorithm without a good guess will likely land in some meaningless local maxima – even if multiple random initial conditions are tried – and/or take a very long time to converge.

Hence, before fitting SHHMM with the Baum–Welch algorithm, we will first find a crude estimator of the SHHMM by solving many simpler subproblems by using the procedure described below.

3.1 Initialization: the slice estimate

The idea is to first treat the MRO observations of each day of the year t∈𝒯 separately. On each day t, the distributions form a mixture model that can be fitted with a standard EM algorithm. Once this is done, we relabel the hidden state at each day t to ensure some continuity in the estimated parameters . Finally, by identifying the most likely a posteriori states on each date n, we obtain an estimated sequence, , which we use to fit the transition matrices . The whole procedure is described in Appendix F. In Appendix F7, we show the gain in terms of likelihood and number of iterations when using the slice estimate compared to random initialization.

3.2 Baum–Welch algorithm for SHHMM

In the previous section, we provide an estimated SHHMM that we will use as a starting point in the Baum–Welch algorithm. The algorithm alternates between estimation (E) and maximization (M) steps to converge to a local maximum of the observed likelihood defined for the SHHMM with m≥1 (see Sect. 2.3) by

where for sake of simplicity we assume that h1 is known so that . Note that this is the case in practice, as we have a few extra days of data to define h1. We briefly detail each step of the EM algorithm in Algorithm 1, and more details can be found in Appendix E.

Algorithm 1EM algorithm for SHHMM 𝒞m.

Note that at M-step, the maximization can be done independently for the transition matrices and the distributions of the observations (and initial distributions). However, since we enforce the coefficients θ(i) as periodic functions of the day of the year t, the maximization step cannot be done explicitly even for a simple Bernoulli distribution and is thus done numerically.

In all our numerical applications, the stopping criterion is . The log-likelihood at convergence is typically for the settings K=4, m=1, Deg=1, and the historical data , i.e., . We also check that this stopping criterion is relevant for the θ parameters as we have , where the max is taken as the largest difference between two iterations over all the parameters θ in Eq. (10).

To avoid being trapped in a local minimum, we run the algorithm 10 times with initial conditions randomized around the initial state θ(0) provided in Sect. 3.1; see Appendix F6 for more details. We then select the maximum likelihood amongst the different runs.

3.3 Hidden state inference: the Viterbi algorithm

Once the SHHMM parameters are found , the most likely hidden states given the observed data sequence , i.e.,

can be inferred with the Viterbi algorithm (Viterbi, 1967). In this algorithm, to estimate Eq. (13), for n∈𝒟 and k∈𝒦, the quantity

is estimated recursively. For a homogeneous HMM with no local memory, Eq. (14) is simply

See Viterbi (1967). For an SHHMM Eq. (14) is

This can be shown by a straightforward adaptation of the original proof.

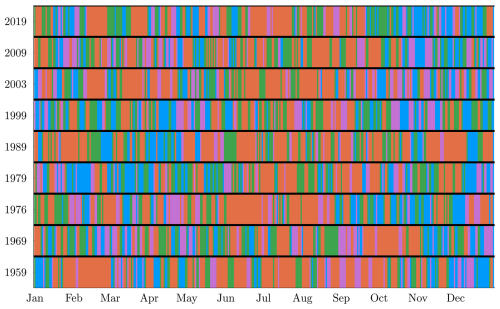

This algorithm provides a very efficient way to decode the whole hidden state sequence corresponding to the observations, allowing us to match historical weather events to hidden state sequences. This is illustrated in Sect. 4.4.

3.4 Model selection

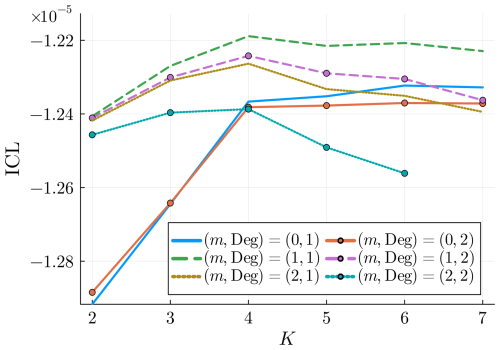

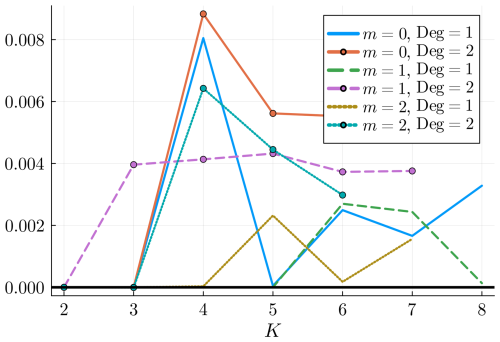

We introduced three hyperparameters to our model: the local memory length , the number of hidden states (weather regimes) , and the degree of the trigonometric expansion in Eq. (8). In particular, the number of hidden states K must be large enough to reproduce spatial correlations but low enough to avoid overfitting and loss of interpretability. In this model, we fix m and Deg to be the same for all stations and variables.

In the literature, several methods have been used to assess the best hyperparameters of HMMs, information criterion coefficients like the Bayesian information criterion (BIC), and cross-validation; see de Chaumaray et al. (2023), and references therein. From a theoretical point of view, no result guarantees the quality of these estimators for SHHMM. To select the hyperparameter K, we use the integrated complete-data likelihood (ICL) criterion, as it favors nonoverlapping hidden states and shows better empirical performance with HMM than other model selection methods (Celeux and Durand, 2008; Pohle et al., 2017). It is defined as , which is not accessible in practice. The estimate

uses the fitted parameter and the decoded Viterbi most likely hidden state sequence . The ICL is then computed as

The optimal set is obtained by maximizing . In Fig. 4, we see that , and Deg=1 maximize the ICL. Hence, for the rest of the paper, unless specified otherwise, we will choose these parameters. Note that in Robertson et al. (2004), K=4 hidden states were also found for northeast Brazil using cross-validation. Note that, in principle, we could use different ms at each station s∈𝒮, as well as different degrees Degs for each type of variable and station (transition matrix coefficient, Bernoulli parameter, etc.). We tested configurations where some stations had a larger local memory (ms=2 or 3), but this consistently resulted in a lower ICL. This suggests that while the ICL criterion is well-suited for selecting the number of states K, it may not be optimal for choosing other hyperparameters, as some stations, such as La Hague, show signs of higher-order temporal dependence (see Figs. 11 and 12). Alternative criteria such as BIC (Katz, 1981) or parsimonious higher-order Markov models (e.g., Raftery, 1985) might be considered. For the remainder of the paper, we fix m=1 at all stations.

3.5 Comparison with multisite WGEN-type models

In this section, we introduce another multisite rain occurrence model that will be used for comparison. This model was first proposed by Wilks (1998) using first-order Markov models to simulate rain occurrence with a Gaussian latent model to generate spatially correlated amounts. Srikanthan and Pegram (2009) later extended it to fourth-order Markov models to better reproduce dry/wet spell distributions. This class of weather generators is also referred to as WGEN-type models (Nguyen et al., 2023) and is typically used alongside rainfall amount models (e.g., Evin et al., 2018). Note that in the literature, the acronym WGEN has also been used to refer to other models. Seasonality is accounted for by assuming that the parameters remain constant within each month.

Mathematically, at each station s∈𝒮 and for a month , rain occurrence follows a Markov model of order mW, with transition probabilities given by

where is the history variable of order mW, introduced in Sect. 2.3.

The multisite correlations are modeled using an unobserved Gaussian process U. At each day n∈𝒟 and for a given month ,

where , and is an S×S positive-definite correlation matrix.

The rain occurrence at site s is determined by the value of . Given a history and the Bernoulli probability ,

where Φ−1 is the quantile function of the standard normal distribution.

As in Srikanthan and Pegram (2009) and Evin et al. (2018), we set the Markov model order to mW=4. The correlation matrix is estimated following the previous references by simulating each site pair for each month to determine that yields the observed correlations, where 𝒟mth is the set of all days in month mth.

The model is fitted, yielding parameters for the Markov chains and for the correlation matrices.

The biggest advantage of this model is that it is not limited by the conditional independence hypothesis; i.e., stations can be as close or as far apart as needed. Moreover, the fitting procedure is slightly simpler, as no expectation maximization algorithm is required. The seasonality treatment differs slightly, as WGEN-type models assume parameters to be constant per month, while in our setting, they evolve smoothly throughout the year. Ignoring this minor difference, the complexity of this model is significantly greater than ours. The number of correlation coefficients grows as ∼S2, whereas our model scales as ∼K2 for the spatial part, with typically K≪S. Thus, while our model is limited in the number of stations due to the conditional independence assumption, WGEN-type models may be constrained in practice by computational complexity. Moreover, as we will show, setting our local history to m=1 provides good results in general, whereas WGEN-type models typically require larger mW values to adequately reproduce dry/wet sequences (see Sect. 5.2.1). This suggests that part of the temporal dependency is captured by the hidden states, simplifying the local Markov models. Additionally, large-scale dry spells will not be accurately represented, as described in Sect. 5.3. This is not entirely surprising, as WGEN-type models only account for pairwise correlations. Note that higher orders could, in principle, be added at a much greater computational cost.

One of the main messages of this paper is to show that the resulting hidden states are fully interpretable, both spatially and temporally. In particular, forcing conditional independence (see Eqs. 5 and 7) forces all spatial correlations to be in the hidden states.

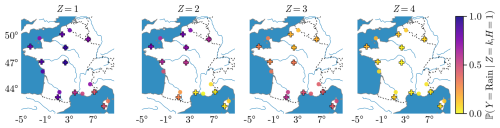

The discrete latent variable Z∈𝒦 used here corresponds to weather regimes, sometimes referred to as weather types or patterns, which represent a finite set of possible atmospheric states acting as quasi-stationary, persistent, and recurrent large-scale flow patterns. They are commonly used in weather generators to characterize the daily atmospheric circulation (e.g., Garavaglia et al., 2010), which influences the values of the generated variables at the daily timescale. Various methods exist for identifying these weather regimes, with hidden Markov models being one such approach. In our case, these hidden states are not constrained by any external variables and will be interpreted as specialized weather states for France. To our knowledge, no previous approach using spatial HMM has been applied to infer and interpret weather regimes over France (or western Europe). Generally, only a few attempts (e.g., Robertson et al., 2004, Sect. 4) identify and interpret weather regimes without using exogenous variables.

We describe in this section different points of view to give a sense of these hidden states that we also refer to as weather regimes. In the following, all plots and interpretations are done for the model 𝒞m=1 with K=4 and Deg=1, which was the model selected in Sect. 3.4.

4.1 Spatial features

The hidden states have been introduced to give correlated rain events across France. Hence, we expect the hidden states to form some spatial patterns specific to French weather; typically, the south is generally drier than the north.

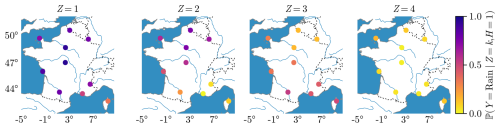

4.1.1 Rain probability

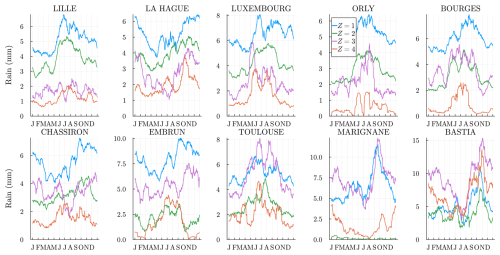

In Fig. 5, we show the rain probability given the hidden state k and that the previous day was dry, averaged over the year. The Z=1 state corresponds to a high probability of rain over all of France, Z=2 corresponds to a rainy climate in the north and drier in the south, and Z=3 is more or less the opposite, while in the state Z=4 the probabilities of rain are low all over France. The trained model satisfactorily recovers known regional features of the French climate. For higher-order models K>4, the spatial features are more and more specific to peculiar regimes, e.g., rainy only in Bastia. It can also be a signal of overfitting.

4.1.2 North Atlantic pressure maps

To interpret the model beyond the stations it was trained on and beyond the rain occurrence variable, we will look at the hidden states in terms of weather patterns over the North Atlantic with pressure maps. This is a common practice in climatology and is used to classify weather patterns. For example, the four North Atlantic weather regimes are large-scale weather regimes over the North Atlantic Ocean responsible for most of the climate variability (Woollings et al., 2010). There are various definitions of the regimes of the North Atlantic weather, and it is typically done using clustering methods such as K-means on the daily maps of anomalous 500 hPa geopotential height (e.g., van der Wiel et al., 2019). Note that, as noted in Garavaglia et al. (2010), “it is almost impossible to assert that a given classification is the best” – hence, the comparisons presented in this section are mostly qualitative, as our model classifies weather regimes using only rain occurrence, while other weather variables or classification techniques would yield different regimes.

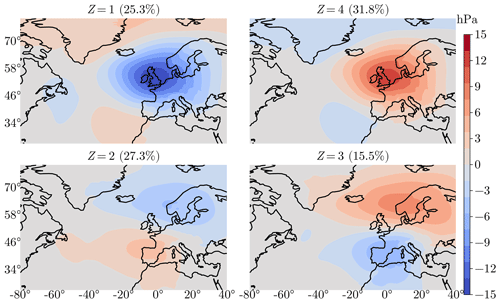

In Fig. 6, we show how the weather regimes are relevant in terms of pressure maps. We consider the mean sea level pressure (MSP) from the reanalysis ERA5 hourly data on single levels (Hersbach et al., 2020) from 1979 to 2017. The pressure map is averaged over all winter days conditional on the hidden state (inferred before via the Viterbi algorithm (see Sect. 3.3), giving a pressure anomaly map at each longitude and latitude. The geographical area where the pressure maps are computed is and . It is much larger than France and corresponds roughly to the North Atlantic area. The results are shown in Fig. 6.

Figure 6Winter (December–January–February) mean sea level pressure (Pa) anomalies (difference) between the average of all winter days in Z=k state and the average of all winter days. The relative frequency of occurrence of each state during winter in the historical data is shown in parentheses.

The four maps clearly show four distinct regimes with different pressure anomalies. It is remarkable that coherent large-scale structures over mean sea level pressure are found with a model only trained over S=10 stations, all located in France, with rain occurrences as training data.

As a first comparison, we consider the four Euro-Atlantic regimes defined in Cassou (2004, Fig. 7). We display the same differential pressure map as they do over the winter months for a similar spatial domain. In Cassou (2004) and van der Wiel et al. (2019), the four regimes are NAO−, Atlantic Ridge, blocking, and NAO+. The two NAO regimes correspond to the reinforcement or attenuation of the Icelandic low and Azores high, leading to a strengthened or weakened westerly flow over France. The two other regimes correspond to different deviations of this flow, having different consequences for French weather, depending on the season.

In our model selection, we found the same number of hidden states K=4; see Sect. 3.4. If to some extent the states defined by the SHHMM (Fig. 6) look similar to the Euro-Atlantic regimes (see Cassou, 2004, Fig. 7) in terms of the order of magnitude of the mean pressure anomalies (∼10 hPa) and patterns, e.g., Z=4 can be seen as a blocking-like pattern.

However, a close inspection shows important differences: the structures we found are more centered toward France and slightly more intense. This is to be expected as the training data are only in France. A fairer comparison can be found with Boé and Terray (2008), where the weather types (WTs) found with rain amounts in France are also coherent with ours and easier to compare as they represent, like us, the MSP anomalies. They define eight WTs over extended winter months (November to March). WT2 (25 % relative frequency of occurrence) is very close to our Z=4 (31.8 %) in terms of pattern and amplitude, even far outside France. WT6 (12.5 %) is also very close to our Z=3 (15.5 %), i.e., a milder depression centered on the Azores and an anticyclone centered in northern Europe. The other two rainier hidden states Z=1 and Z=2 probably cannot be viewed as simple combinations of the remaining WTs. This is consistent with Boé and Terray (2008), who use rainfall amounts for clustering.

In Garavaglia et al. (2010), the weather patterns (WPs) are found using stations mostly located in the southeast of France and a more complex rain variable. Note that their main figure (Fig. 3) shows the WP geopotential height at 1000hPa averaged all year long, making the comparison with our Fig. 6 harder. Their anticyclonic state (WP8) (25 %) is close to our Z=4, with a pressure high over the north of France and south of Great Britain.

4.2 Seasonality

The SHHMM's transition matrix and distributions have periodic coefficients varying across the year. A consequence is that the hidden states are not fixed in time but can also vary. We expect variations to be smooth enough so that weather regime Z=k has a similar interpretation during the whole year.

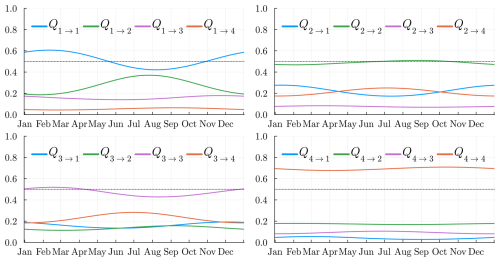

4.2.1 Transition matrix

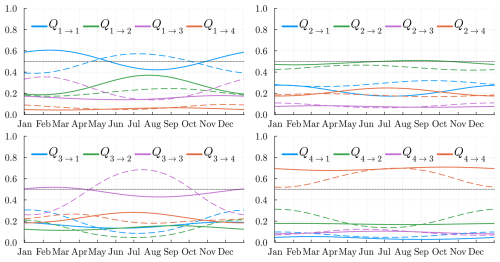

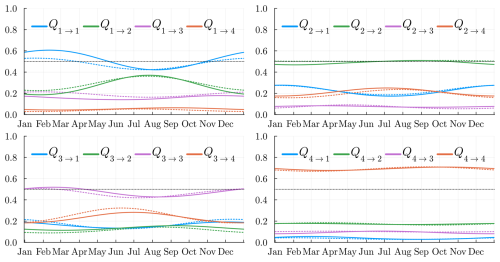

We display, in Fig. 7, the 16 coefficients of the transition matrix Qt. The “dry” state Z=4 is the state where the probability of staying in the same state is the highest. Hence, we expect longer global dry sequences than the other regimes. The probability of remaining in the same state is the lowest in states Z=2 and Z=3; hence, these can be seen as transitional states. Moreover, state Z=4 has a very low probability of switching directly to state Z=1 (and vice versa), confirming that an intermediate state is required for this to happen. This makes sense with the intuition that a dry day all over the country is rarely followed by a wet day all over France. During some seasons, e.g., summer (June, July, August, September), state Z=2 will prefer to transition to a dry state Z=4 rather than the wet state Z=1. This is the opposite situation in the rest of the year. Again, this is consistent with the fact that during summer we expect the state Z=1 to be less frequent.

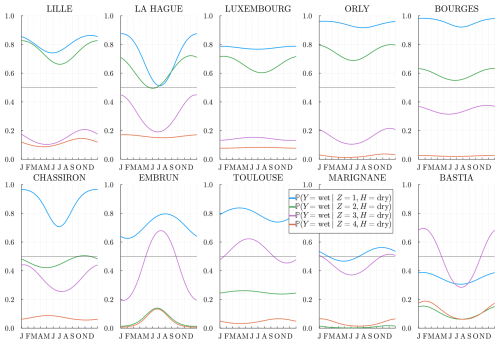

4.2.2 Rain probability

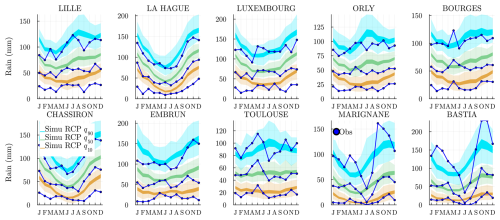

We plot, in Fig. 8, the rain probabilities as a function of the station and climate variable Z=k. In almost all stations, the extreme states Z=1 (4) are where it rains most (least) often. As seen in Fig. 5, states Z=2 and 3 are different in the north and south.

Figure 8Estimated probability for m=1 and h=dry, i.e., the probability of rain at the location s, conditional on the hidden state and on a previous dry day. The stations are sorted by latitude from northernmost (top left) to the southernmost (bottom right).

The success of the SHHMM fit can be observed as, at each station, most states are completely separated all year long. That is, in general, the rain probability conditioned on different states Z does not cross states or become equal, showing meaningful states. When converging to local minima, we would typically observe such state crossings during the year, indicating potential issues in the fit.

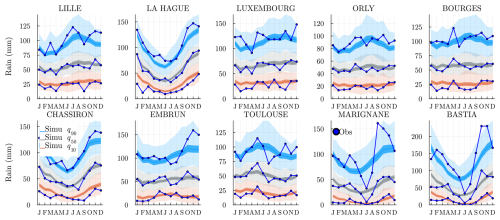

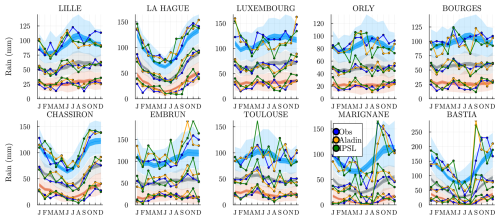

4.3 Mean rain amount

Even if the model training does not involve any rain amounts , the hidden states Z=k should still be meaningful for these. In Fig. 9, we plot the daily mean rain amount for each station and state k. The values obtained are smoothed with a periodic moving average of time window ±15 d; see Appendix D for the definition. The “rainy” weather regime k=1 is the state where it rains the most at almost every location and all year long. Similarly, the “dry” regime k=K is where it rains the least. Interestingly, the intermediate regimes, k=2 and k=3, are rainier in the north in different seasons. Southern stations have a different behavior as expected.

4.4 Weather regime spells

To illustrate the dynamics of the weather regimes, we show in Fig. 10 for different years the Viterbi estimated hidden states (see Sect. 3.3). As previously noticed, dry and wet spells last longer in general than in other states. For historical events such as the drought in the of summer 1976, we observe a long dry sequence (27 d in a row in state Z=4 starting from 3 June). The famous 2003 heatwave from 1 to 15 August also corresponds to a 15 d dry spell.

Now that the model is fully inferred and interpreted, we will test its validity. To do so, we will sample multiple independent and identically distributed (IID) realizations of the training period 1956 to 2019 and compare several spatiotemporal statistics with the historical data.

5.1 Simulation algorithm of the SHHMM

We first sample the hidden states according to the nonhomogeneous periodic transition matrix and initial distribution ξ, and then we draw the MRO from the conditional distributions . The procedure is summarized in Algorithm 2.

Algorithm 2Simulation of the SHHMM

In the simulations, we choose the initial date as 1 January 1956. Our final date is 31 December 2019 so that the total simulated range is 64 years, which corresponds to our dataset span. We choose , i.e., z(1)=1, which is a rainy weather regime, because it was rainy all over France on that day. We assume that the MROs before the first simulation day are observed and use them as input to draw .

The model is lightweight, allowing fast generation – which can easily be parallelized – of many sequences to study climate variability. On a standard computer, generating one 64-year sequence of rain occurrences and hidden states using the StochasticWeatherGenerators.jl package (Métivier, 2024) takes approximately ∼0.01 s.

5.2 Results

In the following, we will use IID. realizations (stochastic simulations) of the SHHMM over a 64-year span and compare its statistics with the 64-year observed sequence and denote by the realizations. When the j is dropped, it refers to the ensemble of all simulations.

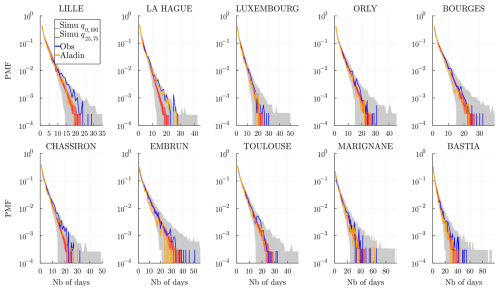

5.2.1 Dry/wet state sequence

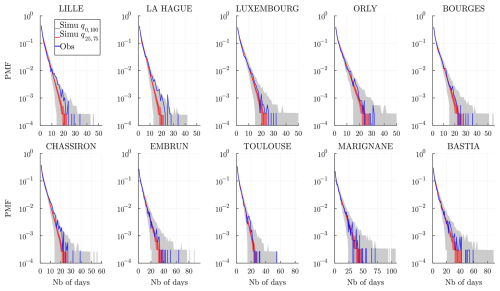

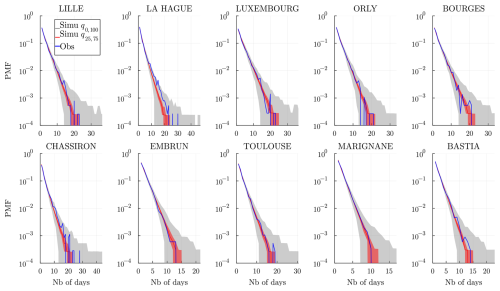

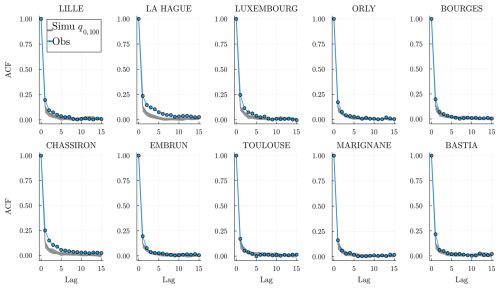

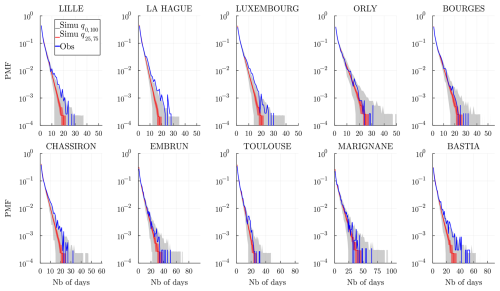

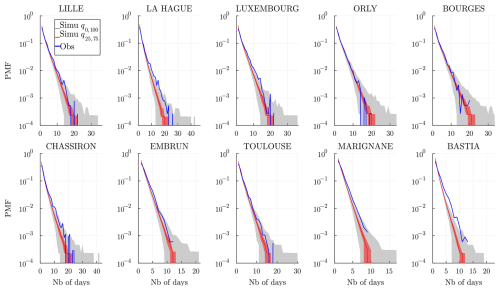

The dry spell sequences are of particular interest to estimate risks associated with droughts. We show the observed dry (wet) spell distributions, i.e., probability mass function (PMF), in Fig. 11 (and Fig. 12) at all the stations and compare them to the simulated spells for the J realization. When the historical distribution is contained in the simulations' envelope, we may conclude that the model does a good job of reproducing the dry (wet) spells: note that this works systematically well, except for La Hague station (bordering the Channel Sea) at a few data points. In general, for Lille, La Hague, and Chassiron, the observed PMFs deviate from the center of the envelope, suggesting that higher m>1 might be required.

Figure 11Dry spell distribution (in number of days) at every station and for a time range 𝒟 of the historical data (blue) and of the J=103 simulated wet spell distribution. The gray envelope covers the full range (q0,100) of the simulations, while the red envelope covers the interquartile range (q25,75), and the line (red) is the median. Simulations are obtained over the same time range 𝒟 and using the model , and 𝒞m=1.

Figure 12Wet spell distribution (in number of days) at every station and for a time range 𝒟 of the historical data (blue) and of the simulated wet spell distribution. The gray envelope covers the full range (q0,100) of the simulations, while the red envelope covers the interquartile range (q25,75), and the line (red) is the median. Simulations are obtained over the same time range 𝒟 and using the model , and 𝒞m=1.

In Appendix B, we show and discuss the distribution obtained using the memoryless 𝒞m=0 model to highlight the gain of the model 𝒞m=1 in both the center and the tails of spell distributions; see Figs. B1 and B2. We note that even though wet spells are in general much shorter than dry spells, having m=1 is necessary to accurately reproduce the wet spells. Note that the WGEN model presented in Sect. 3.5 is also expected, for a sufficiently high order, to perform well for dry/wet spells, as it is trained at each station to learn the temporal dependence of dry/wet sequences.

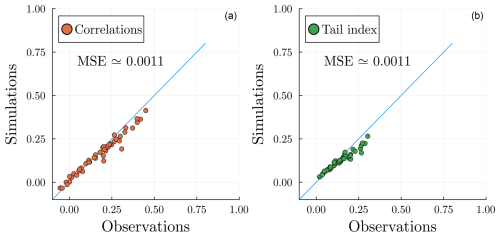

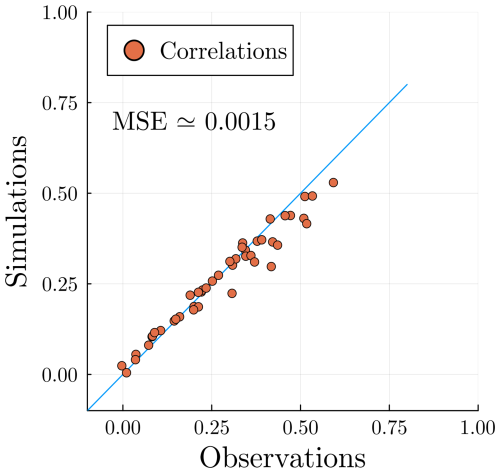

5.2.2 Spatial correlations

We compare in Fig. 13 the observed and simulated correlation coefficients between all sites for all . Most correlations are well-reproduced, showing that the conditional independence hypothesis in Sect. 2.2 (or Sect. 2.3) is valid.

Figure 13 Observed pair correlations for all compared with the correlations computed from the simulations (we average the pair correlations of our simulations). The conditional independence metric MSECI in Eq. (19), is displayed in the figure.

To measure this quantitatively, we define the following conditional independence metric:

The closer to zero, the better the conditional independence hypothesis will be satisfied. This criterion helps compare different choices of stations 𝒮; see Sect. 2.1 and Appendix C for more details. This is the biggest limitation of this work: to produce meaningful hidden states that correctly learn spatial correlations, the MSECI must be small. For example, a pair of stations at the center of Paris and Orly (only ≃13 km apart) would not satisfy the conditional independence hypothesis. Note, however, that the conditional independence between stations is not necessarily isotropic; hence, station configurations with better MSECI are not necessarily those with the largest pairwise station distances.

To give an upper bound to this metric, we trained the model, while keeping seasonality, with K=1 state, i.e., no hidden states (meaning completely independent stations), and found .

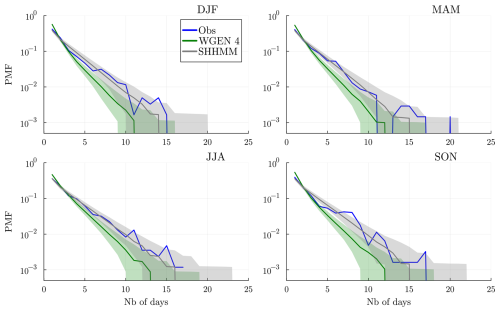

5.3 Spatiotemporal spells

To check the spatiotemporal properties of the model, we can focus on the temporal properties of the following spatial quantity: the rain occurrence rate (ROR). Note that a similar quantity is considered in Baxevani and Lennartsson (2015). On a given day n, it is defined as the fraction of stations above some precipitation threshold Rth,

In general, the precipitation threshold could be made station-dependent, e.g., a given quantile. Such quantities have been used to study large-scale phenomena, e.g., temperature heatwaves (Miloshevich et al., 2023; Cognot et al., 2025). Here, we focus on dry or wet events relevant, for example, for droughts, choosing Rth=0. The previous quantity simplifies to

To evaluate large-scale lasting dry events, we consider spells of ROR(n)≤0.2; i.e., only 20 % or less of the stations are rainy. We show in Fig. 14 the distribution (PMF) of the spells for each season, i.e., December–January–February (DJF), March–April–May (MAM), June–July–August (JJA), and September–October–November (SON). We observe that the SHHMM is able to reproduce short spells as well as the distribution tails for every season. It can even produce longer spells than observed. On the contrary, the WGEN model overestimates short spell durations while underestimating longer spells. This clearly shows that simple correlation models are not adapted to produce correct large-scale weather events, even though they perform well for pairwise correlation and local dry/wet spells. Using censored Gaussian models, Kleiber et al. (2012) and Serinaldi and Kilsby (2014) consider similar quantities but obtain rather poor results. In contrast, Vaittinada Ayar et al. (2020) achieve good results; however, their model is conditioned on synoptic weather regimes and tested on a much smaller area.

Figure 14 Distribution of spells of ROR(n) lower than or equal to 0.2 for each season. We show in blue the observed spells and in gray the simulations obtained with the SHHMM; in green are the simulations obtained with the WGEN model with Markov chains of order 4. In both cases, the gray envelope shows the quantiles q5,95 of the simulations, and the line is the median (q50).

In this section, we attach to the rain occurrence model 𝒞m an add-on: a multisite precipitation amount generator. The procedure is carried out hierarchically, i.e., without modifying or retraining the original model. In fact, other variables such as temperature and solar irradiance could be attached similarly to what will be presented in this section. To do so, one only needs a generator for the new variable, e.g., AR(1) model for temperature, and to allow its parameters to depend on the weather regimes Z(n)=k and to evolve smoothly (as in Sect. 2.4) with the day of the year t. We hypothesize that the new variable has some dependence on both the weather regime and the season. We discussed in Sect. 4 various spatiotemporal interpretations of the weather regime, and thus it makes sense to consider how this global variable is relevant for other weather variables. Hence, the resulting add-on generator should generate a variable at least partially correlated with the original SHHMM. This makes the model very modular, allowing easy extensions without affecting its original performances and interpretations. Figure 9 highlights the rain amount dependence on the weather regime k and seasonality. This principle is applied in this section to build an add-on rainfall generator.

The multisite rain amount (MRA for short) is denoted R(n) as in Eq. (1). Building an MRA generator directly is hard because of the ambivalent probabilistic nature of rain, being neither a discrete nor a continuous variable. Here we can just focus on strictly positive rain amounts R>0 because the SHHMM directly indicates when R=0 or R>0.

To train the rain amount generator, we will use the hidden states found in Sect. 3.3. The schematic of the resulting model is shown in Fig. 15.

Figure 15SHHMM with rain amounts. gk,t denotes the MRA generator with respect to the weather regime k and day of the year t.

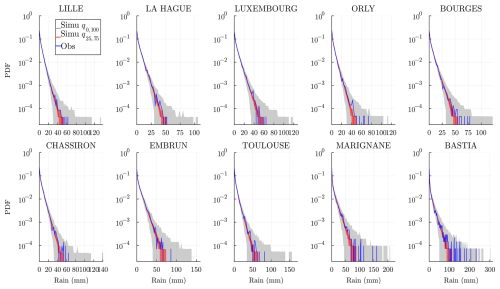

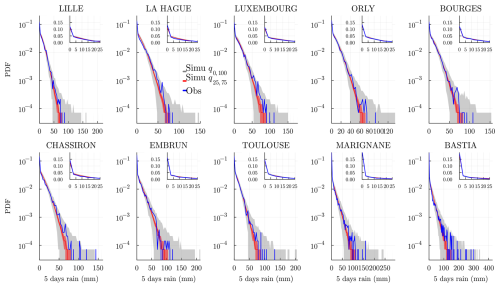

6.1 Marginal rain distributions

The rain amount generator we use to fit the marginal distributions Rs>0 at each station is a mixture g(r) of two exponential distributions, with density